Performance Video:

Final Documentation Live Coding

In our group, Mike was in charge of the music, Ruiqi worked on the visuals, and Rebecca worked on both and also controlled the MIDI values and the ASCII text.

Visual

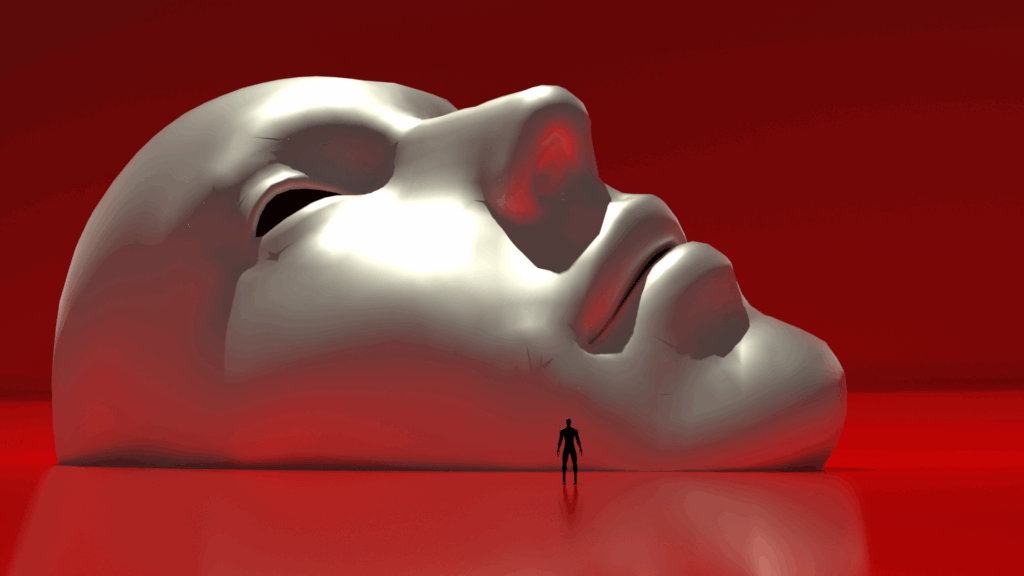

Personally, I’ve started thinking of Hydra more as a post-processing tool than a starting point for visuals. I’ve gotten a bit tired of its typical abstract look, but I still love how effortlessly it adds texture and glitchy effects to existing visuals. That’s why I chose to build the base of the visuals in Blender and TouchDesigner, then bring them into Hydra to add that extra edge.

As always, I’m drawn to a black, white, and red aesthetic—creepy and dark visuals are totally my thing. I pulled inspiration from a previous 3D animation I made, focusing on the human body, shape, and brain. In the beginning, I didn’t have a solid concept. I was just exploring faces, masks, bodies—seeing what looked “cool.” Then I started bringing some renders into Hydra and tried syncing up with what Mike was creating. We quickly realized that working separately made our pieces feel disconnected, so we adjusted things a bit to make the whole thing feel more cohesive.

At one point, I found myself overusing modulatePixelate() and colorama(), literally slapping them on everything. That’s when I knew I needed to change things up. So I turned to TouchDesigner and used instancing to build a rotating visual with a box, which gave the piece a nice shift in rhythm and form.

In the end, I’m proud of what I made. The visuals really reflect my style, and it felt great combining tools I’ve picked up along the way—it made me feel like a real multimedia artist. I’m also super thankful for my teammates. Everyone put in so much effort, and even though some issues popped up during the final performance, it didn’t really matter. We knew we had given it our all. Big love to the whole cyber brain scanners crew.

Here are some images and videos we made in Blender and TouchDesigner for the performance:

Audio

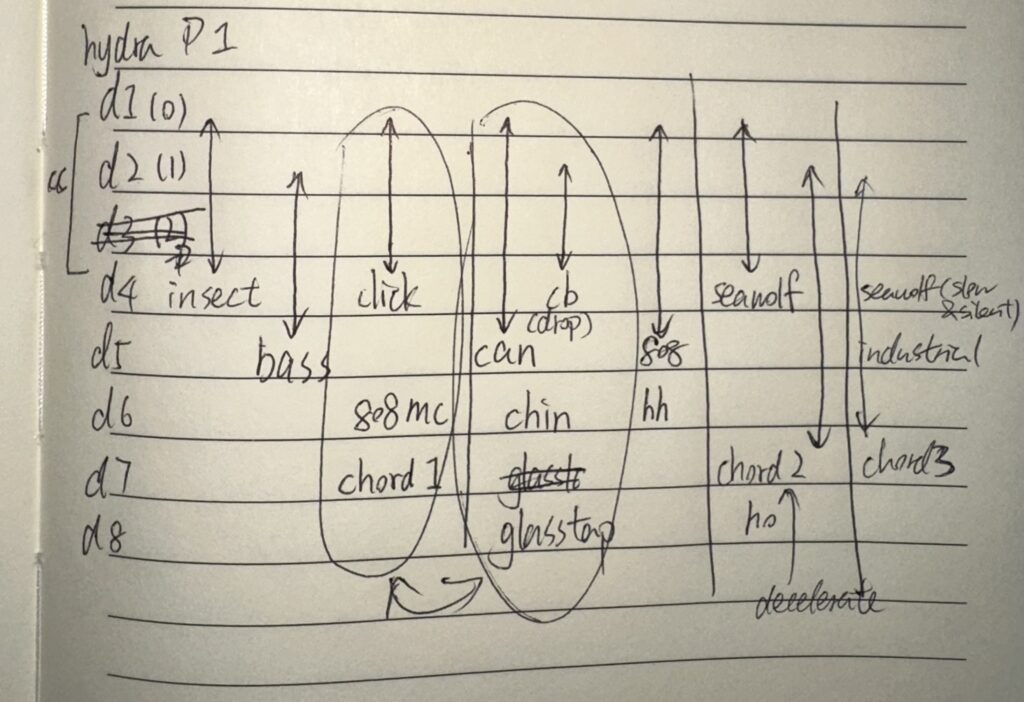

For the whole performance, we built on several keywords: space, cyberpunk, and huge distortion. I drew inspiration from Chicago house, glitch, and industrial music to make the sounds raw and wild, matching the sketches for the visuals.

In the early iterations of the performance, our theme was a space odyssey for cyborgs, so I thought a continuous robotic beeping sound would be a fitting way to start. Although we later built something slightly different, we still agreed that this intro was effective at grabbing the audience’s attention, so we chose to keep it.

For the build-up, I really liked the idea of using human voices as a transition into the second part. To echo the theme, I picked a recording of the crew of Discovery, a NASA space shuttle orbiter, testing the communication system.

The aesthetic of the visuals reminded me to keep the audio minimalistic. Instead of layering more and more tracks as the performance progressed, I used variants of the main melody, created by adding effects like lpf, crush, and chop. The original sample behind the main melody is a one-shot synth, and these effects helped make it sound intense, creepy, and distorted.

In the second part, we wanted to make the audience feel hyped, so I focused more on the sound design for the drums. The snare added depth to the sound, and the clap invited the audience to interact with us. The glitch sample was chosen to match the pixel noise cross in the visuals.

It’s really amazing to see how we have evolved as a group since the very first drum circle project, and it is a pleasure to work together and exchange ideas to make everything better.

Communication with the audience

To do live coding as a performance, we decided to use some extra methods to communicate with the audience. Typically, in a performance, the performer might communicate with the audience directly via microphone, which might undermine the consistency of the audio we are creating. Live coders might also type something in comments, which takes advantage of the nature of live coding, but the comments might be too small compared to the visual effects, and it might be hard for the audience to notice them.

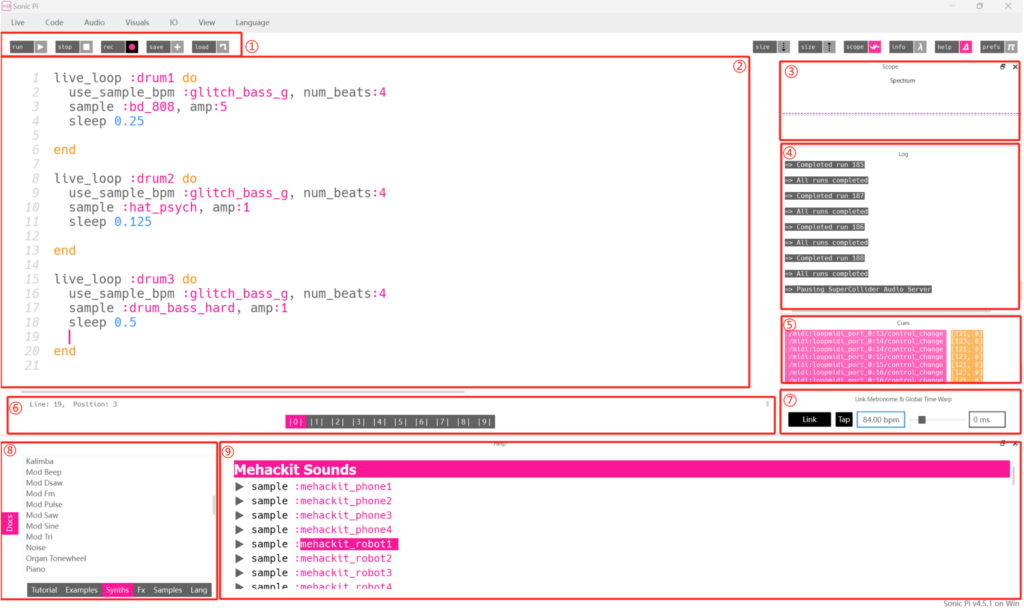

Finally, we came up with the idea of creating ASCII art. ASCII art has been a part of the coding community for a long time, especially when it comes to live coding. In Sonic Pi, one of the most well-known live coding platforms, users are greeted by an ASCII art title for the software. We wanted to hype up the audience by adding some ASCII art to our flok panel, which also makes use of the flok layout and draws those who don’t read code toward the code panel.
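For anyone who wants to try the same trick, here is a minimal sketch of one way to prepare ASCII art for a code panel. It is not the code we ran on stage. The helper name asCommentBlock and the sample banner are made up for illustration. The idea is simply to prefix every line with a comment marker so the panel shows the art without trying to evaluate it: "//" works in a Hydra (JavaScript) panel, and "--" is an assumption for a panel that uses Haskell-style comments.

```javascript
// Hypothetical helper (not part of flok or Hydra): turn ASCII art into
// comment lines so a live coding panel displays it without evaluating it.
function asCommentBlock(art, prefix = "//") {
  return art
    .split("\n")
    // Prefix each line; trimEnd avoids trailing spaces on empty lines.
    .map((line) => (prefix + " " + line).trimEnd())
    .join("\n");
}

// Placeholder banner, made up for this example.
const banner = [
  "+-------+",
  "| HELLO |",
  "+-------+",
].join("\n");

console.log(asCommentBlock(banner));       // JavaScript-style comments
console.log(asCommentBlock(banner, "--")); // Haskell-style comments (assumed)
```

Pasting the printed block into a panel keeps the art visible next to the live code while leaving the running patterns untouched.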

We really managed to hype up the audience and express our big thanks and love to the community that has been supporting us throughout this semester.

👽👽👽💻🧠❤❤❤