Hi, flock-ers! Or should we say, hackers? Happy finals season, and enjoy our documentation:

How We Started, aka the Chaotic Evil:

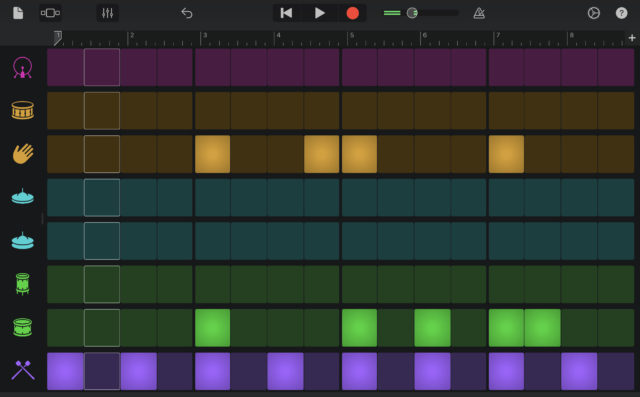

During the first two weeks of practice, we approached the final project with a drum circle mindset. At every meeting, we would split into a Hydra team (2 people) and a Tidal team (2 people) and improvise, creating something new and enjoying it. When it came time to show our build-ups and drops, we struggled. We had lots of sounds and visuals going on separately, but not in one sequence. One evening, we created a mashup that later became the music for our first build-up and drop, though it still had no cohesive visuals or any other connecting tissue.

How We Proceeded, aka Narrowing the Focus:

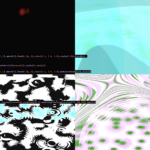

A week later, Amina and Shreya were still improvising with Tidal, perfecting the first build-up and drop while composing the second one. Meanwhile, Dania and Thaís worked on visuals for the first build-up and drop. At one point, Shreya was modifying Amina’s code and a happy accident happened: with a little magic of chance at 11 PM in the IM Lab, it turned into our second drop.

At the same time, we decided to narrow our focus to a smaller set of sounds and visuals. We carefully chose the ones that fit our theme and did not feel disjointed or chaotic.

The Connecting Tissue, aka Blobby and Xavier:

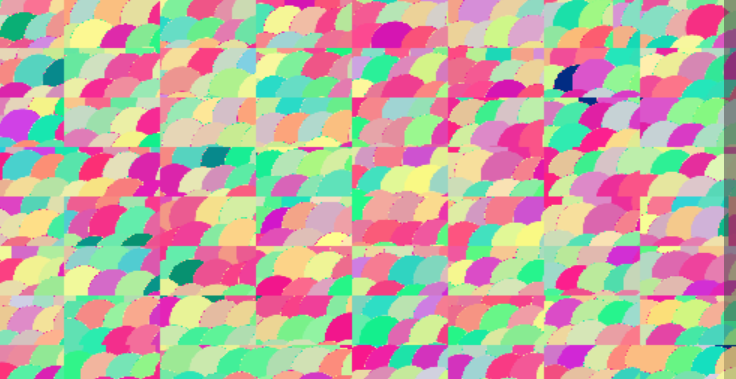

While working on the visuals, we decided to use simple shapes to keep them as engaging and aesthetically pleasing as possible. We narrowed the visuals from our meetings down to two sections, circles and lines, which we later named Blobby and Xavier, respectively. The growing circles were inspired by the dominant sounds in the first build-up, ‘em’ and ‘tink’: when we thought of these sounds, Blobby is what we imagined. Xavier was given its form in the same way. Dania and Thaís came up with these names.

Initially, we wanted to tell the story of an interaction between Blobby and Xavier, but our sounds did not quite match the stories we had in mind. From there, we experimented with different ways to convey a story involving both of them. Since we already had the sound, we started thinking about which visuals looked best with it, and then everything came together almost too perfectly.

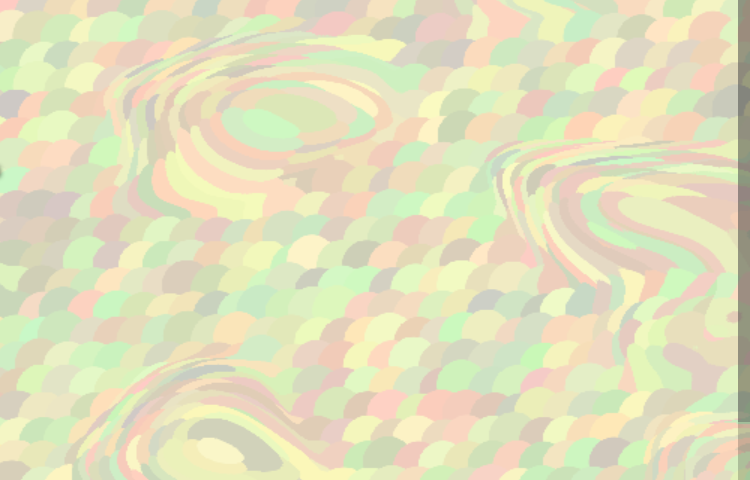

We had the sounds and visuals for our two build-ups and drops, but we needed some way to connect them. Because Blobby and Xavier had nothing to do with each other, we looked for ways to link them so the composition would feel cohesive. That is when we decided to stick with one color scheme for the entire composition. We chose a very bright, colorful palette because that was the vibe we got from our sounds.

To transition from Blobby to Xavier, Dania came up with the idea of filling the entire screen with circles after the first drop. The circles would overlap and create what looks like an osc(). We could then slowly shrink it until it became a line, and modulate that line to get to Xavier. Although this sounded like a wonderful idea, it was a painful one to execute. In the end, though, we managed it, and the result was absolutely lovely <3

Guys, let’s…, aka Aaron’s “SO”:

As we were playing around with the story behind Blobby and Xavier, Shreya and Dania came up with an idea: “Guys, what if we add Aaron’s voice to the performance?” Of course we could not resist, especially since Thaís happened to have a few of his famous lines recorded. The idea quickly became the highlight of our performance. It gave us a way to transition between our build-ups and drops while adding some spice and flavour.

The MIDI Magic, aka How It All Came Together:

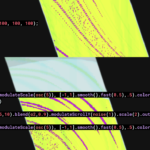

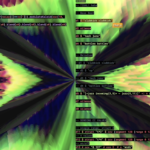

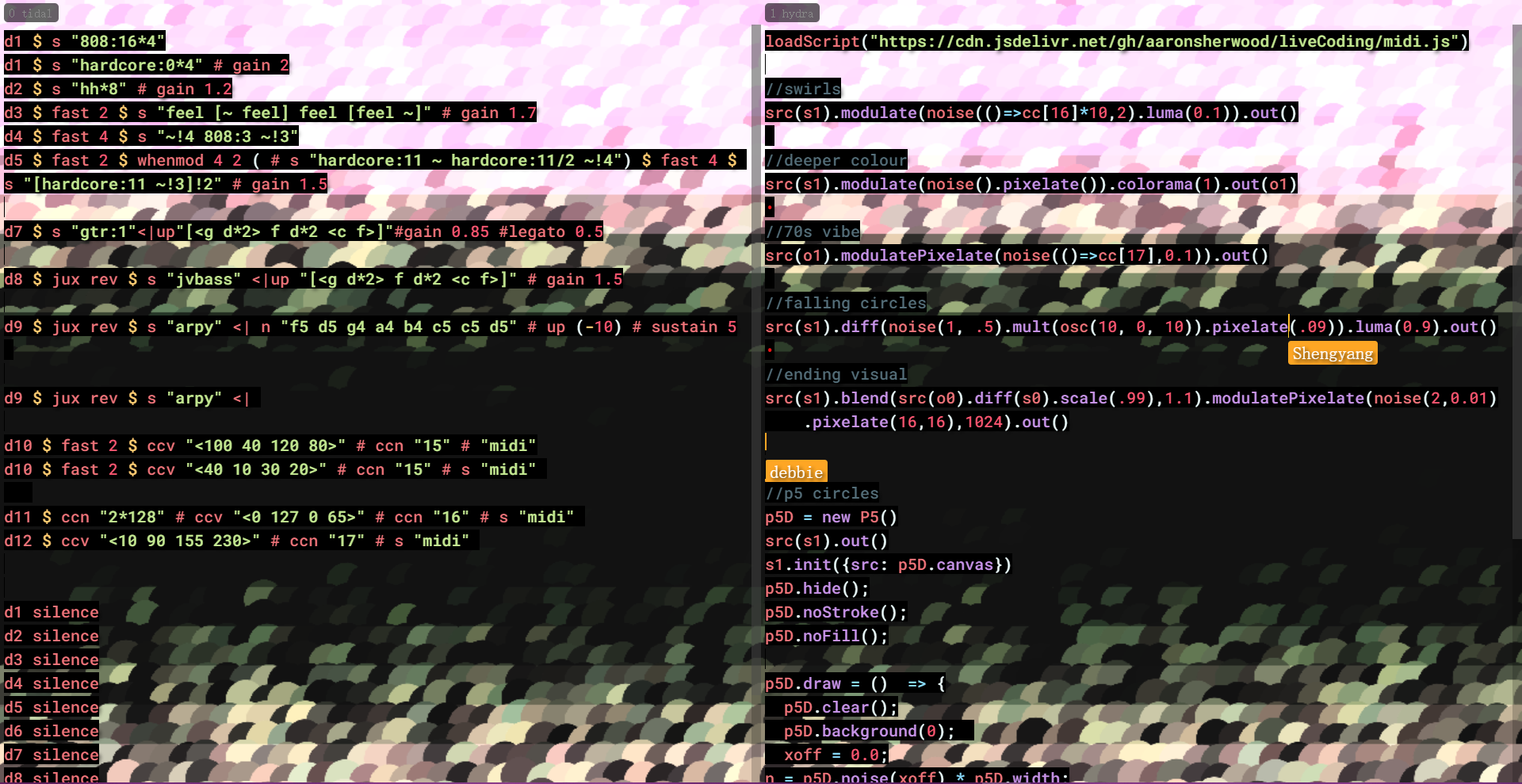

We had the sounds and the visuals, but we still needed some way to connect the two. This is where the MIDI magic happened. Because the music starts slowly, we used MIDI to map each sound to its own visual element. That way, every change in sound is accompanied by a visual change, and neither the sonic nor the visual side gets monotonous. Once the piece builds up, though, having every sound drive its own visual element would have been too much. So we grouped the sounds into sections, with all the drum-like sounds corresponding to one visual element. For example, clubkick and soskick both modulate Blobby, instead of one modulating it and the other having some unrelated effect.

We also gave the dominant sounds the biggest visual changes, an idea inspired by the various artists’ work we saw in class. We applied the same approach to Xavier. While linking sounds to visuals, we put a lot of thought into what the visual effect of each sound should be. We used MIDI both to map the beat of the sounds to visual changes and to automate the first half of the visuals. Somehow, we ended up using around 25 different MIDI channels (some real MIDI magic happened there).
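To make the two mapping strategies concrete, here is a minimal sketch in plain JavaScript. It is not our actual Hydra script, and every name in it is hypothetical: `perSoundMap`, `groupedMap`, `route`, `ccToAmount`, the visual parameter names, the `"hh"` group and the `amount` weights. The intro section routes each sound to its own visual parameter. Later sections route whole groups of sounds to one shared parameter, so clubkick and soskick both land on the same Blobby modulation. The only assumed MIDI fact is that control-change (CC) values run from 0 to 127.

```javascript
// Hypothetical sketch of sound-to-visual routing; names are illustrative,
// not taken from our performance scripts.

// Intro (sparse) section: each sound drives its own visual parameter.
const perSoundMap = {
  em: "blobbySize",
  tink: "blobbyCount",
  clubkick: "blobbyModulate",
  soskick: "blobbyScroll",
};

// Later (busier) sections: sounds are grouped so that all drum-like sounds
// drive the same parameter. A larger `amount` means a bigger visual change;
// the drums are assumed here to be the dominant group.
const groupedMap = [
  { sounds: ["clubkick", "soskick"], target: "blobbyModulate", amount: 1.0 },
  { sounds: ["hh"], target: "blobbyColor", amount: 0.3 },
];

// Return the visual parameter (and its weight) a sound should drive in the
// given section, or null if the sound is not mapped there.
function route(sound, section) {
  if (section === "intro") {
    const target = perSoundMap[sound];
    return target ? { target, amount: 1.0 } : null;
  }
  const group = groupedMap.find((g) => g.sounds.includes(sound));
  return group ? { target: group.target, amount: group.amount } : null;
}

// MIDI CC values run from 0 to 127; scale one into the range [0, amount].
function ccToAmount(ccValue, amount) {
  return (ccValue / 127) * amount;
}

// Usage: in a later section, both kicks resolve to the same Blobby parameter.
console.log(route("clubkick", "drop"));
console.log(route("soskick", "drop"));
console.log(route("soskick", "intro"));
```

Keeping the routing in one table makes it cheap to switch from "one sound, one visual" to grouped mappings as the piece builds. Only the lookup changes, not the visuals themselves.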

one_min_pls(), aka the Story of Our Composition:

Once our composition was ready, it was time to think about the performance. After all, it is live coding! We had to decide how we wanted the composition to look on screen and how to call the functions or evaluate the lines, while still telling a story. All of us were super keen on having a story rather than a random evaluation of lines. After much discussion, we decided to make the composition a story about the composition itself: how it came to life and how we coded it. To do this, we made the performance a sort of conversation between us. Sometimes a function’s name matched something we would usually say while triggering that block of code (e.g. i_hope_this_works() for p5, because it would usually crash). Other times, it was named after what we were saying at that moment (e.g. i_can_see_so()). Because the function names were based on our conversations, they were really easy (and fun) to follow and remember: all we had to do was respond to each other as we usually would. It was a grape 🍇 bonding experience.

Reflection (CAUTION: the amount of cheese here is life-threatening):

Our group was very chaotic most of the time, but somehow that worked perfectly for us. We’re glad we were able to showcase some of this chaos and cohesiveness through our composition, and our personalities are very prominent in it. Every time we see a qtrigger, we think of Shreya. A clubkick reminds us of Thaís, and the drop reminds us of how we accidentally made our first drop very late at night and couldn’t stop listening to it. The party effect after the drop reminds us of Amina (I’m not sure why?), and every time we hear our mashup we start dying of laughter. At times we could even see the essence of ourselves in this composition. What we liked most is that we usually got excited to work on it. It didn’t feel like a chore, but rather like hanging out with friends and jamming.

P.S:

The debate has finally been settled by a class vote: hydra-tidal supremacy hehe

– someone who is obviously wrong

No, it hasn’t. I yielded because of Shreya’s mouse, nothing else.

– the voice of reason

Documentation video of our (live) performance:

Documentation video of our (not SOOO live) performance:

Final Hydra script of our performance:

https://github.com/daniaezz/liveCoding/blob/main/aaaaaaaa.js

Final Tidal start-up code of our performance:

https://github.com/daniaezz/liveCoding/blob/main/finalCompositionFunctions.tidal

The evolution of our code can be seen here:

https://github.com/ak7588/liveCoding