LiveCodeLab is a web-based livecoding environment for real-time 3D visuals and sample-based sequencing. It was created and released by Davide Della Casa in April 2012, and since November 2012 it has been co-authored by Davide Della Casa and Guy John.

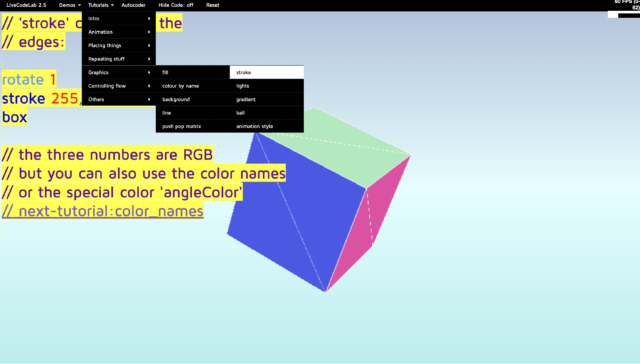

The motivation behind LiveCodeLab (LCL) was to gather the good elements of existing livecoding environments and to ground the new environment in a new language that is compact, expressive, and immediately accessible to an audience with low computer literacy. Technically, LiveCodeLab has been directly influenced by Processing, Jsaxus, Fluxus, and Flaxus. Its language evolved from JavaScript to CoffeeScript to the current language, LiveCodeLang.

These characteristics give LCL particular value in live performance and education. Audience members can follow the code more easily (if they want to), and users from young children to adults, whatever their background, can pick it up easily.

In addition, the website provides a clean sequence of tutorials, so beginners can learn from scratch quite quickly.

However, I did find a gap between the tutorials and the demos. More advanced manipulations are not covered in the tutorials, so the demo code is sometimes hard to follow.

In sum, I have found LiveCodeLab to be:

- Straightforward & Compact

- E.g. rotate red box

- “On-the-fly”: as you type, things pop up

- transient states -> “constructive” nature of the performance

- though simpler, more liveness*

- 3D, but it can also create 2D patterns with tricks (paintOver, zooming in, etc.)

- Limited in the manipulations it offers (both audio and visual)

Apart from the tutorial, valuable things I found:

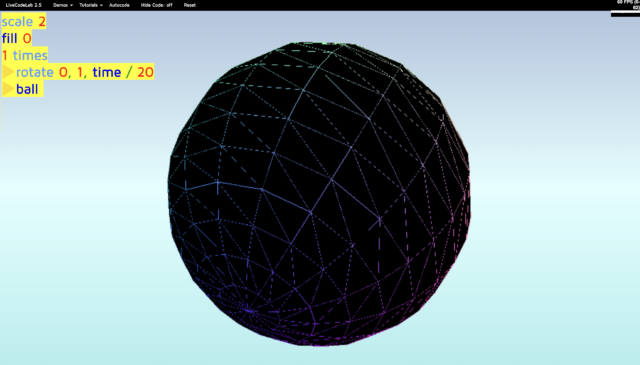

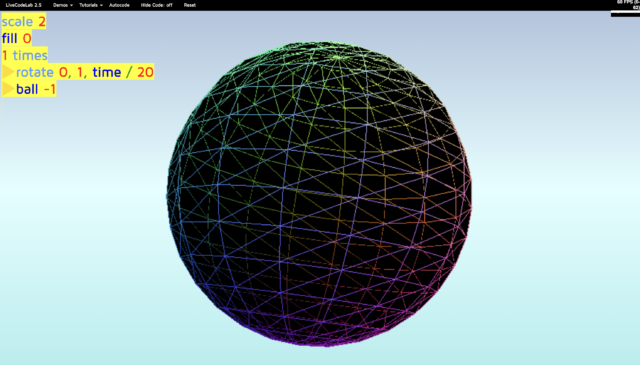

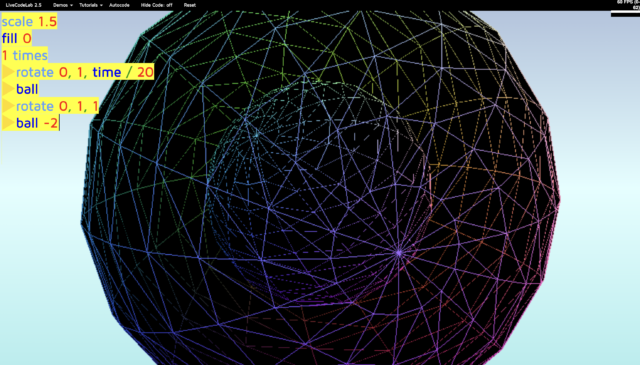

- The number after an object:

The absolute value controls the scale; a negative number makes the object transparent.

- “pulse” is a good expression to use when the visuals are accompanied by audio
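The observation about the number after an object can be restated as a small Python sketch. It only models the behaviour as I described it above; the names `Appearance` and `interpret_size_argument` are hypothetical, and this is not how LiveCodeLab itself is implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Appearance:
    """Hypothetical container for how an object ends up being drawn."""
    scale: float
    transparent: bool


def interpret_size_argument(value: float) -> Appearance:
    """Model the observed rule for the number written after an object.

    Assumption (taken from the notes above, not from LiveCodeLab's source):
    the absolute value sets the scale, and a negative sign makes the
    object transparent.
    """
    return Appearance(scale=abs(value), transparent=value < 0)


if __name__ == "__main__":
    # Compare a positive and a negative argument of the same magnitude.
    for arg in (2, -2, 0.5, -0.5):
        print(arg, interpret_size_argument(arg))
```

Under this reading, 2 and -2 give an object of the same size, and only the second one is transparent.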

Supplementary Materials:

Trials:

References

Davide Della Casa, [personal website], http://www.davidedc.com/livecodelab-2012

D. Della Casa and G. John, LiveCodeLab 2.0 and its language LiveCodeLang, ACM SIGPLAN, FARM 2014 workshop.

LiveCodeLab, https://livecodelab.net/