Visuals (Aadhar & Chenxuan)

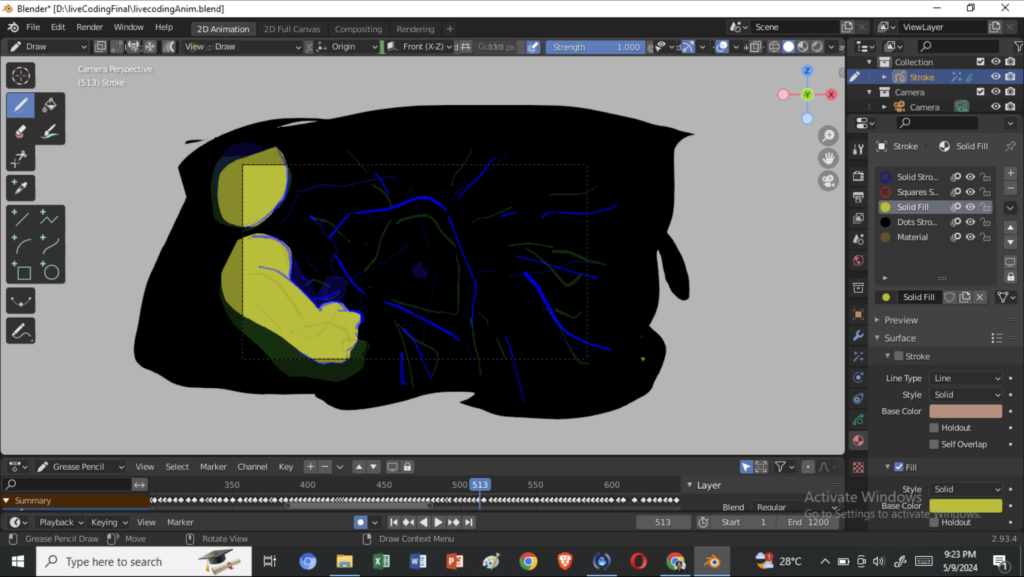

The idea was to combine hydra visuals with an animation overlaid on top. Aadhar drew a character animation of a guy falling, which was layered over the visuals to drive the story and the sounds. Blender was used to draw the frames and render the animation.

The first issue with overlaying the animation was making the background of the animated video transparent. We tried hard to import a video that already had a transparent background, but the background always showed up black in hydra no matter what we did. In the end, we made the background transparent inside hydra itself using its luma function, which turned out to be much easier.
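To illustrate what a luma key does to each pixel, here is a minimal sketch in plain JavaScript. It is not hydra's source code: the function names, the luminance weights, and the threshold and tolerance values are assumptions chosen for illustration. The idea is that dark pixels (such as a black video background) get an alpha close to 0, so whatever is underneath shows through.

```javascript
// Perceived brightness of an [r, g, b] pixel with channels in the range 0..1.
// The weights are standard luminance coefficients (assumed for this sketch).
function luminance([r, g, b]) {
  return 0.2125 * r + 0.7154 * g + 0.0721 * b;
}

// Smooth ramp from 0 to 1 as x goes from edge0 to edge1 (as in GLSL).
function smoothstep(edge0, edge1, x) {
  const t = Math.min(1, Math.max(0, (x - edge0) / (edge1 - edge0)));
  return t * t * (3 - 2 * t);
}

// Returns [r, g, b, a]: pixels darker than the threshold fade to transparent.
function lumaKey(rgb, threshold = 0.5, tolerance = 0.1) {
  const a = smoothstep(threshold - tolerance, threshold + tolerance, luminance(rgb));
  return [rgb[0] * a, rgb[1] * a, rgb[2] * a, a];
}

// In hydra itself the chain might look like this (the clip URL is hypothetical):
//   s0.initVideo("https://example.com/falling-clip.mp4")
//   src(o1).layer(src(s0).luma(0.1, 0.05)).out(o0)
```

With a low threshold such as 0.1, only the near-black background is keyed out, while the drawn character stays opaque.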

Next, because our video was 2 minutes long, we couldn’t get all of it into hydra. Apparently, hydra only accepts clips up to 15 seconds long, so we had to chop the video into eight 15-second pieces and trigger each one at the right time to make the animation flow. However, it wasn’t as smooth as we had hoped. It took a lot of rehearsals to get used to triggering the videos at the right time, and we never fully got it right, even by the end. The videos kept looping before we could trigger the next one (we did our best to cover for this, and the final performance reflected that). Besides the animation itself, we used several shaders to create the background.
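The timing problem described above comes down to simple arithmetic: 120 seconds divided into 15-second clips gives eight clips, each with a fixed start time. The following sketch computes such a schedule and looks up which clip should be playing at a given moment. The function names and the schedule shape are hypothetical, and this is not the code we used in the performance, where each clip was triggered by hand.

```javascript
// Split a video of totalSeconds into consecutive clips of at most clipSeconds.
function clipSchedule(totalSeconds, clipSeconds) {
  const clips = [];
  for (let start = 0; start < totalSeconds; start += clipSeconds) {
    clips.push({
      index: clips.length,
      start,
      end: Math.min(start + clipSeconds, totalSeconds),
    });
  }
  return clips;
}

// Find the clip that covers a given elapsed time, or null once the video is over.
function clipAt(schedule, elapsedSeconds) {
  const clip = schedule.find(
    (c) => elapsedSeconds >= c.start && elapsedSeconds < c.end
  );
  return clip ?? null;
}

// A 2-minute animation cut into 15-second pieces.
const schedule = clipSchedule(120, 15);
console.log(schedule.length); // number of clips to trigger
console.log(clipAt(schedule, 44)); // the clip that should be on screen at 0:44
```

A schedule like this makes it clear that the next clip has to be triggered exactly at each 15-second boundary; any later, and the current clip starts over from its first frame.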

Chenxuan was responsible for this part of the project. We created a total of six shaders. Notably, the shader featuring the colorama effect appears more two-dimensional, which aligns better with the animation style. This is crucial because it ensures that the characters and the background seem to exist within the same layer, maintaining visual coherence.

However, we ran into several issues with the shaders, mostly errors during loading. Each shader seemed to have its own problem. For example, some shaders had data type conflicts between integers and floats. Others declared the ‘x’ or ‘y’ variables more than once, which caused conflicts within the code.

Additionally, the shaders displayed inconsistently across platforms. In Pulsar they rendered as expected, but in Flok the display split into four separate windows, which complicated our testing and development process.

Code for the visuals can be found here.

Audio (Ian)

The audio is divided into three main parts: one before the animation, one during the animation, and one after the animation. The part before the animation features a classic TidalCycles buildup—the drums stack up, a simple melody using synths comes in, and variation is given by switching up the instruments and effects. This part lasts for roughly a minute, and its end is marked by a sample (Transcendence by Nujabes) quietly coming in. Other instruments fade out as the sample takes center stage. This is when the animation starts to come in, and the performance transitions to the second part.

The animation starts by focusing on the main character’s closed eyes. The sample, sounding faraway at first, grows louder and more pronounced as the character opens their eyes and begins to fall. This is the first of six identifiable sections within this part, and it continues until the moment the character appears to become emboldened with determination, at which point a different sample (Sunbeams by J Dilla) comes in. This second section continues until the first punch, with short samples (voice lines from Parappa the Rapper) adding to the conversation from this point onwards.

Much of the animation features the character falling through the sky, punching through obstacles on the way down. We thought the moments where these punches occur would be great for emphasizing the connection between the audio and the visuals. After some discussion, we decided to achieve this by switching both the main sample and the visuals (using shaders) with each punch. Each punch is also made audible through a punching and crashing sound effect. As there are three punches in total, the audio changes three times after the aforementioned second section. These are the third to fifth sections (one sample from Inspiration of My Life by Citations, two samples from 15 by pH-1).

The character eventually falls to the ground, upon which the animation rewinds quickly and the character falls back upwards. A record scratch sound effect is used to convey the rewind, and a fast-paced, upbeat sample (Galactic Funk by Casiopea) is used to match the sped-up footage. This is the sixth and final section of this part. The animation ends by focusing back on the character’s closed eyes, and everything fades out to allow for the final part to come in.

The final part seems to feature another buildup. A simple beat is made using the 808sd and 808lt instruments. A short vocal(ish?) sample is then played a few times with varying effects, as if to signal something left to be said—and indeed there is.

Code for the audio and the lyrics can be found here.

The spirit of Live Coding:

Embrace the spontaneity

Today’s me might fail me

But there’s always tomorrow

I’m looking forward to tomorrow, too!