Final Project Documentation: Jun Ooi, Raya Tabassum, Maya Lee, Juan Manuel Rodriguez Zuluaga

Video Link: https://drive.google.com/file/d/15Rmvtz3kL-ofHJQ6K3GiCsjlAorl1_80/view?usp=drive_link

An important element of the practice of Live Coding is how it challenges our current perspective on, and use of, technology. We’ve talked about it through the lens of notation, liveliness(es), and temporality, among others. Our group, the iCoders, wanted to explore this with our final project. We discussed how dependent we are on our computers, which for many of us are Macs. What is our input, and what systems are there to receive it? What does four people editing a Google document simultaneously indicate about the liveliness of our computers? With this in mind, we decided to construct a remix of Apple computer sounds. These are some of the sounds we hear the most in our day-to-day lives, and we thought it would be fun to take them out of context, break their patterns, and dance to them. Perhaps it is a juxtaposition between the academic and the non-academic, the technical and the artsy. We wanted it to be an EDM remix because this is what we usually hear at parties, and we believed the style would work really well. We began creating and encountered important inspirations throughout the process.

- Techno:

- Diamonds on my Mind: https://open.spotify.com/album/4igCnwKUaJNezJWHlWv8Bs

- Rave: https://open.spotify.com/track/2OQLFQns8ZjTTTOyQHKLhi

During one of our early meetings, we had the vast library of Apple sounds but were struggling a bit to pull something together. We decided to check whether someone had done something similar to our idea and found this video by Leslie Way, which helped us A LOT.

- Mac Remix: https://www.youtube.com/watch?v=6CPDTPH-65o

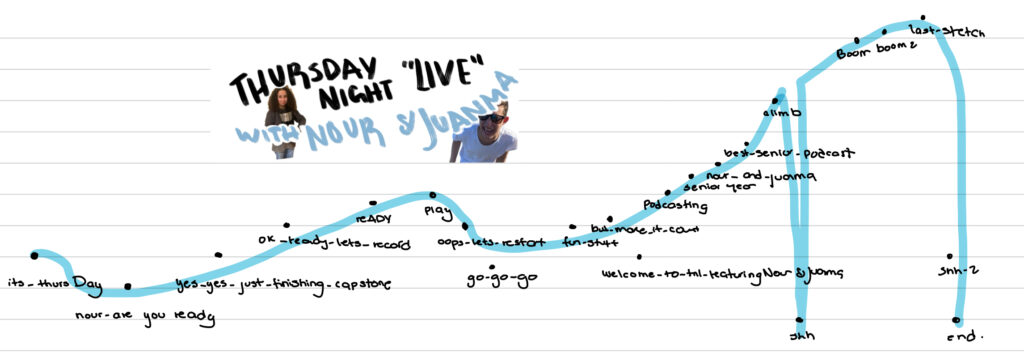

Compositional Structure: From the very beginning we wanted our song to be “techno.” Nevertheless, once we found Leslie Way’s remix, we thought that the vibe it goes for fits Apple’s sounds very well. After a lot of testing and playing around with sounds, we settled on the idea of having a slow, “cute” beginning using only (or mostly) Apple sounds. Here, the computer would slowly get overwhelmed by all our IM assignments and open tabs. The computer would then crash, and we would introduce a “techno” section, where we’d try to emulate the songs we’d been listening to. After many, many, many iterations, we reached a structure like this:

The song begins slowly and grows, there is a shutdown, it quickly grows again, and there is a big drop. Then the elements slowly fade out, and we turn off the computer because we “are done with the semester.”

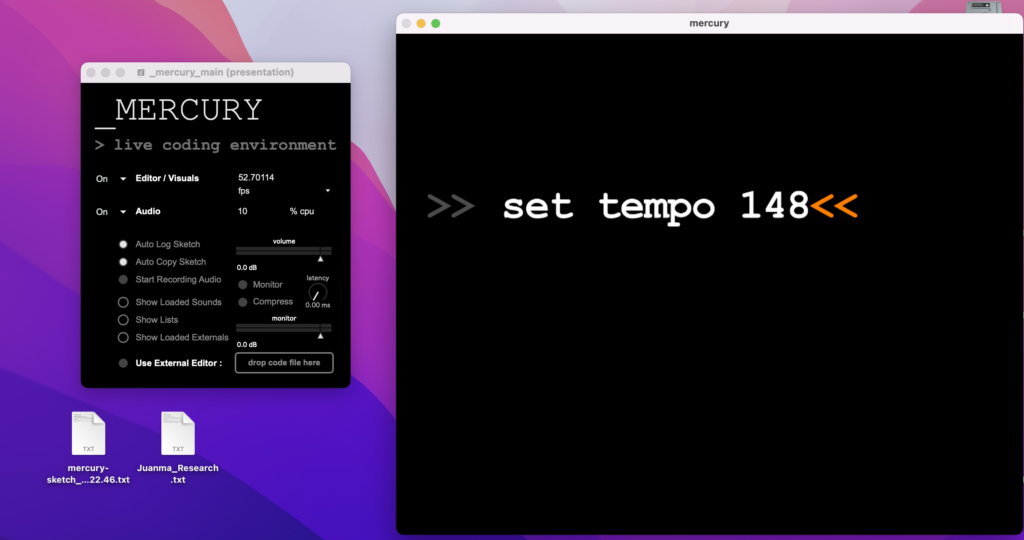

Sound: The first thing we did once we chose this idea was find a library of Apple sounds. We found many of them on a webpage and added those we considered necessary from YouTube. We used these, along with the Dirt-Samples (mostly for drums), to build our performance. We used some of the songs linked above to mirror the beats and instruments, but we also experimented a lot. Here is the code for our TidalCycles sketch:

hush

boot

one

two

three

four

do -- 0.5, 1 to 64 64

d1 $ fast 64 $ s "apple2:11 apple2:10" -- voice note sound

d2 $ fast 64 $ s "apple2:0*4" # begin 0.2 # end 0.9 # krush 4 # crush 12 # room 0.2 # sz 0.2 # speed 0.9 # gain 1.1

once $ ccv "6" # ccn "3" # s "midi"

d16 $ ccv "10*127" # ccn "2" # s "midi";

shutdown

reboot

talk

drumss

once $ s "apple3" # gain 2

macintosh

techno_drums

d11 $ silence -- silence mackintosh

buildup_2

drop_2

queOnda

d11 $ silence -- mackin

d11 $ fast 2 $ striate 16 $ s "apple3*1" # gain 1.3 -- Striate

back1

back2

back3

back4

panic

hush

-- manually trigger mackin & tosh to spice up sound

once $ s "apple3*1" # begin 0.32 # end 0.4 # krush 3 # gain 1.7 -- mackin

once $ s "apple3*1" # begin 0.4 # end 0.48 # krush 3 # gain 1.7 -- tosh

-- d14 $ s "apple3*1" # legato 1 # begin 0.33 # end 0.5 # gain 2

-- once $ s "apple3*1" # room 1 # gain 2

hush

boot = do{

once $ s "apple:4";

once $ ccv "0" # ccn "3" # s "midi";

}

one = do {

d1 $ slow 2 $ s "apple2:11 apple2:10"; -- voice note sound

d16 $ slow 2 $ ccv "<30 60> <45 75 15>" # ccn "2" # s "midi";

once $ slow 1 $ ccv "1" # ccn "3" # s "midi";

}

two = do {

d3 $ qtrigger $ filterWhen (>=0) $ s "apple2:9 {apple2:13 apple2:13} apple2:0 apple2:3" # gain 1.5 # hpf 4000 # krush 4;

xfadeIn 4 2 $ slow 2 $ qtrigger $ filterWhen (>=0) $ s "apple2:7 apple2:8 <~ {apple2:7 apple2:7}> apple2:7" # gain 0.8 # krush 5 # lpf 3000;

d16 $ ccv "15 {40 70} 35 5" # ccn "2" # s "midi";

once $ ccv "2" # ccn "3" # s "midi";

}

three = do {

xfadeIn 2 2 $ qtrigger $ filterWhen (>=0) $ s "apple2:0*4" # begin 0.2 # end 0.9 # krush 4 # crush 12 # room 0.2 # sz 0.2 # speed 0.9 # gain 1.1;

xfadeIn 12 2 $ qtrigger $ filterWhen (>=2) $ slow 2 $ s "apple:7" <| note (arp "up" "f4'maj7 ~ g4'maj7 ~") # gain 0.8 # room 0.3;

xfadeIn 6 2 $ qtrigger $ filterWhen (>=3) $ s "apple2:11 ~ <apple2:10 {apple2:10 apple2:10}> ~" # krush 3 # gain 0.9 # lpf 2500;

d16 $ ccv "30 ~ <15 {15 45}> ~" # ccn "2" # s "midi";

once $ ccv "3" # ccn "3" # s "midi";

}

four = do {

-- d6 $ s "bd:4*4";

d5 $ qtrigger $ filterWhen (>=0) $ s "apple2:2 ~ <apple2:2 {apple2:2 apple2:2}> ~" # krush 16 # hpf 2000 # gain 1.1;

xfadeIn 11 2 $ qtrigger $ filterWhen (>=1) $ slow 2 $ "apple:4 apple:8 apple:9 apple:8" # gain 0.9;

d16 $ qtrigger $ filterWhen (>=0) $ slow 2 $ ccv "10 20 30 40 ~ ~ ~ ~ 60 70 80 90 ~ ~ ~ ~" # ccn "2" # s "midi";

once $ ccv "4" # ccn "3" # s "midi";

}

buildup = do {

d11 $ silence;

once $ ccv "5" # ccn "3" # s "midi";

d1 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 2, s "apple:4*1" # cut 1),

(2, 3, s "apple:4*2" # cut 1),

(3, 4, s "apple:4*4" # cut 1),

(4, 5, s "apple:4*8" # cut 1),

(5, 6, s "apple:4*16" # cut 1)

] # room 0.3 # speed (slow 6 (range 1 2 saw)) # gain (slow 6 (range 0.9 1.3 saw));

d6 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 2, s "808sd {808lt 808lt} 808ht 808lt"),

(2,3, fast 2 $ s "808sd {808lt 808lt} 808ht 808lt"),

(3,4, fast 3 $ s "808sd {808lt 808lt} 808ht 808lt"),

(4,6, fast 4 $ s "808sd {808lt 808lt} 808ht 808lt")

] # gain 1.4 # speed (slow 6 (range 1 2 saw));

d12 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 1, slow 2 $ s "apple:7" <| note (arp "up" "f4'maj7 ~ g4'maj7 ~")),

(1, 2, slow 2 $ s "apple:7*2" <| note (arp "up" "f4'maj7 c4'maj7 g4'maj7 c4'maj7")),

(2, 3, fast 1 $ "apple:7*4" <| note (arp "up" "f4'maj7 c4'maj7 g4'maj7 c4'maj7")),

(3, 4, fast 1 $ s "apple:7*4" <| note (arp "up" "f4'maj7 c4'maj7 g4'maj7 c4'maj7")),

(4, 6, fast 1 $ s "apple:7*4" <| note (arp "up" "f4'maj9 c4'maj9 g4'maj9 c4'maj9"))

] # cut 1 # room 0.3 # gain (slow 6 (range 0.9 1.3 saw));

d16 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 2, ccv "20"),

(2, 3, ccv "50 80" ),

(3, 4, ccv "40 60 80 10" ),

(4, 5, ccv "20 40 60 80 10 30 50 70" ),

(5, 6, ccv "20 40 60 80 10 30 50 70 5 25 45 65 15 35 55 75" )

] # ccn "2" # s "midi";

}

shutdown = do {

once $ s "print:10" # speed 0.9 # gain 1.2;

once $ ccv "7" # ccn "3" # s "midi";

d1 $ silence;

d2 $ qtrigger $ filterWhen (>=1) $ slow 4 $ "apple2:0*4" # begin 0.2 # end 0.9 # krush 4 # crush 12 # room 0.2 # sz 0.2 # speed 0.9 # gain 1.1;

d3 $ silence;

d4 $ silence;

d5 $ silence;

d6 $ silence;

d7 $ silence;

d8 $ silence;

d9 $ silence;

d10 $ silence;

d11 $ silence;

d12 $ silence;

d13 $ silence;

d14 $ silence;

d15 $ silence;

}

reboot = do {

once $ s "apple:4" # room 1.4 # krush 2 # speed 0.9;

once $ ccv "0" # ccn "3" # s "midi";

}

talk = do {

once $ s "apple3:1" # begin 0.04 # gain 1.5;

}

drumss = do {

d12 $ silence;

d13 $ silence;

d5 $ silence;

d6 $ fast 2 $ s "808sd {808lt 808lt} 808ht 808lt" # gain 1.2;

d8 $ s "apple2:3 {apple2:3 apple2:3} apple2:3!6" # gain (range 1.1 1.3 rand) # krush 4 # begin 0.1 # end 0.6 # lpf 2000;

d7 $ s "apple2:9 {apple2:13 apple2:13} apple2:0 apple2:3" # gain 1.3 # lpf 2500 # hpf 1500 # krush 3;

d9 $ s "feel:5 ~ <feel:5 {feel:5 feel:5}> ~" # krush 3 # gain 0.8;

d10 $ qtrigger $ filterWhen (>=0) $ degradeBy 0.1 $ s "bd:4*4" # gain 1.5 # krush 4;

d11 $ qtrigger $ filterWhen (>=0) $ s "hh*8";

xfadeIn 14 2 $ "jvbass ~ <{jvbass jvbass} {jvbass jvbass jvbass}> jvbass" # gain (range 1 1.2 rand) # krush 4;

xfadeIn 15 1 $ "bassdm ~ <{bassdm bassdm} {bassdm bassdm bassdm}> bassdm" # gain (range 1 1.2 rand) # krush 4 # delay 0.2 # room 0.3;

d10 $ ccv "1 0 0 0 <{1 0 1 0} {1 0 1 0 1 0}> 1 0" # ccn "4" # s "midi";

once $ ccv "8" # ccn "3" # s "midi";

}

dancyy = do {

d1 $ s "techno:4*4" # gain 1.2;

d2 $ degradeBy 0.1 $ fast 16 $ s "apple2:13" # note "<{c3 d4 e5 f2}{g3 a4 b5 c2}{d3 e4 f5 g2}{a3 b4 c5 d2}{e3 f4 g5 e2}{f3 f4 f5 f2}{a3 a4 a5 a2}{b3 b4 b5 b2}>" # gain 1.2;

}

macintosh = do {

d11 $ s "apple3*1" # legato 1 # begin 0.33 # end 0.5 # gain 2;

once $ s "apple:4";

once $ ccv "7" # ccn "3" # s "midi";

}

techno_drums = do {

once $ ccv "10" # ccn "3" # s "midi";

d14 $ ccv "1 0 ~ <{1 0 1 0} {1 0 1 0 1 0}> 1 0" # ccn "2" # s "midi";

d6 $ s "techno*4" # gain 1.5;

d7 $ s " ~ hh:3 ~ hh:3 ~ hh:3 ~ hh:3" # gain 1.5;

d8 $ fast 1 $ s "{~ apple2:7}{~ hh}{~ ~ hh hh}{ ~ hh}" # gain 1.3;

d9 $ fast 1 $ s "{techno:1 ~ ~ ~}{techno:1 ~ ~ ~}{techno:1 techno:3 ~ techno:1}{~ techno:4 techno:4 ~} " # gain 1.4;

d4 $ "jvbass ~ <{jvbass jvbass} {jvbass jvbass jvbass}> jvbass" # gain (range 1 1.2 rand) # krush 4;

d15 $ "bassdm ~ <{bassdm bassdm} {bassdm bassdm bassdm}> bassdm" # gain (range 1 1.2 rand) # krush 4 # delay 0.2 # room 0.3;

}

buildup_2 = do {

d7 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 11, s " ~ hh:3 ~ hh:3 ~ hh:3 ~ hh:3" # gain (slow 11 (range 1.5 1.2 isaw))),

(11, 12, silence)

];

d8 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 11, s "{~ apple2:7}{~ hh}{~ ~ hh hh}{ ~ hh}" # gain (slow 11 (range 1.3 1 isaw))),

(11, 12, silence)

];

d9 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 11, s "{techno:1 ~ ~ ~}{techno:1 ~ ~ ~}{techno:1 techno:3 ~ techno:1}{~ techno:4 techno:4 ~}" # gain (slow 11 (range 1.5 1.2 isaw))),

(11, 12, silence)

];

d4 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 11, s "jvbass ~ <{jvbass jvbass} {jvbass jvbass jvbass}> jvbass" # gain (range 1 1.2 rand) # krush 4),

(11, 12, silence)

];

d13 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 11, s "bassdm ~ <{bassdm bassdm} {bassdm bassdm bassdm}> bassdm" # gain (range 1 1.2 rand) # krush 4 # delay 0.2 # room 0.3),

(11, 12, silence)

];

d11 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 1, s "apple3" # cut 1 # begin 0.3 # end 0.5 # gain 1.7),

(1, 2, silence),

(2, 3, s "apple3" # cut 1 # begin 0.3 # end 0.5 # gain 1.9),

(3, 4, silence),

(4, 5, s "apple3" # cut 1 # begin 0.3 # end 0.5 # gain 2.1),

(5, 6, silence),

(6, 7, s "apple3" # cut 1 # begin 0.3 # end 0.5 # gain 2.1),

(7, 8, silence),

(11, 12, s "apple3" # cut 1 # begin 0.3 # end 0.5 # gain 2.3)

];

d1 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 5, s "apple:4*1" # cut 1),

(5, 7, s "apple:4*2" # cut 1),

(7, 8, s "apple:4*4" # cut 1),

(8, 9, s "apple:4*8" # cut 1),

(9, 10, s "apple:4*16" # cut 1)

] # room 0.3 # gain (slow 10 (range 0.9 1.3 saw));

d2 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 5, s "sn*1" # cut 1),

(5, 7, s "sn*2" # cut 1),

(7, 8, s "sn*4" # cut 1),

(8, 9, s "sn*8" # cut 1),

(9, 11, s "sn*16" # cut 1)

] # room 0.3 # gain (slow 11 (range 0.9 1.3 saw)) # speed (slow 11 (range 1 2 saw));

d16 $ qtrigger $ filterWhen (>=0) $ seqP [

(0, 5, ccv "10"),

(5, 7, ccv "5 10" ),

(7, 8, ccv "5 10 15 20" ),

(8, 9, ccv "5 10 15 20 25 30 35 40" ),

(9, 10, ccv "40 45 50 55 60 65 70 75 80 85 90 95 10 110 120 127" )

] # ccn "6" # s "midi";

once $ ccv "11" # ccn "3" # s "midi";

}

queOnda = do {

d11 $ fast 4 $ s "apple3" # cut 1 # begin 0.3 # end 0.54 # gain 2;

d14 $ ccv "1 0 ~ <{1 0 1 0} {1 0 1 0 1 0}> 1 0" # ccn "2" # s "midi"

} -- que onda!

drop_2 = do {

d5 $ qtrigger $ filterWhen (>=0) $ s "apple2:2 ~ <apple2:2 {apple2:2 apple2:2}> ~" # krush 8 # gain 1.1;

d7 $ qtrigger $ filterWhen (>=0) $ s "apple2:9 {apple2:13 apple2:13} apple2:0 apple2:3" # gain 1.6 # lpf 3500 # hpf 1000 # krush 3;

d8 $ qtrigger $ filterWhen (>=0) $ s "apple2:3!6 {apple2:3 apple2:3} apple2:3" # gain (range 0.8 1.1 rand) # krush 16 # begin 0.1 # end 0.6 # lpf 400;

d10 $ qtrigger $ filterWhen (>=0) $ degradeBy 0.1 $ s "apple2:0*8" # begin 0.2 # end 0.9 # krush 4 # room 0.2 # sz 0.2 # gain 1.3;

d12 $ fast 1 $ s "{~ hh} {~ hh} {~ ~ hh hh} {~ hh}" # gain 1.3;

d13 $ fast 1 $ s "{techno:1 ~ ~ ~}{techno:1 ~ ~ ~}{techno:1 techno:3 ~ techno:1}{~ techno:4 techno:4 ~} " # gain 1.4;

d4 $ s "realclaps:1 realclaps:3" # krush 8 # lpf 4000 # gain 1;

d15 $ qtrigger $ filterWhen (>=0) $ s "apple:0" <| note ("c4'maj ~ c4'maj7 ~") # gain 1.1 # room 0.3 # lpf 400 # hpf 100 # delay 1;

d2 $ fast 4 $ striate "<25 5 50 15>" $ s "apple:4" # gain 1.3;

d14 $ fast 4 $ ccv "1 0 1 0" # ccn "2" # s "midi";

once $ ccv "12" # ccn "3" # s "midi";

d10 $ ccv "1 0 1 0 1 0 1 0" # ccn "4" # s "midi";

} -- Striate

back1 = do {

d3 $ silence;

d15 $ silence;

d11 $ silence;

d13 $ silence;

d1 $ s "apple2:11 apple2:10"; -- voice note sound

d16 $ slow 2 $ ccv "<30 60> <45 75 15>" # ccn "2" # s "midi";

}

back2 = do {

d1 $ silence;

d4 $ silence;

d6 $ silence;

d12 $ silence;

d16 $ ccv "15 {40 70} 35 5" # ccn "2" # s "midi";

}

back3 = do {

xfadeIn 2 3 $ silence;

d16 $ ccv "30 ~ <15 {15 45}> ~" # ccn "2" # s "midi";

}

back4 = do{

once $ ccv "0" # ccn "3" # s "midi";

d11 $ qtrigger $ filterWhen (>=0) $ seqP [

(1, 2, s "apple3:1" # room 1 # gain 2),

(8, 9, s "apple:4" # room 3 # size 1)

];

xfadeIn 7 1 $ s "apple2:9 {apple2:13 apple2:13} apple2:0 apple2:3" # gain 1.6 # lpf 3500 # hpf 1000 # krush 3 # djf 1;

xfadeIn 2 1 $ silence;

xfadeIn 10 1 $ silence;

d5 $ silence;

d8 $ silence;

d9 $ silence;

}

d7 $ s "apple2:9 {apple2:13 apple2:13} apple2:0 apple2:3" # gain 1.6 # lpf 3500 # hpf 1000 # krush 3

d7 $ fadeOut 10 $ s "apple2:9 {apple2:13 apple2:13} apple2:0 apple2:3" # gain 1.6

drop_2

back1

back2

back3

back4

panic

-- Macintosh

queOnda

panic

once $ s "apple3:1" # begin 0.04 # gain 1.2

d1 $ slow 2 $ "apple:4 {~ apple:7 apple:7 apple:8} {apple:9 apple:9} {apple:4 ~ ~ apple2:9}" # cut 1 # note "c5 g4 f5 b5"

d12 $ fast 1 $ s "{~ hh ~ ~}{hh ~}{~ hh ~ hh}{hh hh}" # gain 1.3

d15 $ s "hh:7*4"

d16 $ degradeBy 0.2 $ s "hh:3*8" # gain 1.4

d1 $ silence

d1 $ slow 2 $ "apple:4 {~ apple:7 apple:7 apple:8} {apple:9 apple:9} {apple:4 ~ ~ apple2:9}" # cut 1 # note "[c5 g4 f5 b5]"

d1 $ slow 2 $ "apple:4 {~ apple:7 apple:7 apple:8} {apple:9 apple:9} {apple:4 ~ ~ apple2:9}" # cut 1 # note "[c5 e5 a5 c5]"

d2 $ s "techno*4"

d12 $ fast 1 $ s "{~ hh}{~ hh}{~ ~ hh hh}{ ~ hh}" # gain 1.3

d1 $ slow 2 $ "apple:4 {~ apple:7 apple:7 apple:8} {apple:9 apple:9} {apple:4 ~ ~ apple2:9}" # cut 1 # note "c5 g4 f5 b5" # speed 2

hush

d12 $ s "apple:4*4" # cut 1

d12 $ silence

techno_drums

drop_2 = do

d12 $ fast 1 $ s "{~ hh}{~ hh}{~ ~ hh hh}{ ~ hh}" # gain 1.3

d13 $ fast 1 $ s "{techno:1 ~ ~ ~}{techno:1 ~ ~ ~}{techno:1 techno:3 ~ techno:1}{~ techno:4 techno:4 ~} " # gain 1.4

d2 $ fast 4 $ striate "<7 30>" $ s "apple:4*1" # gain 1.3 -- Striate

drop_2

hush

-- MIDI

-- bassdm ~ <{bassdm bassdm} {bassdm bassdm bassdm}> bassdm

d14 $ ccv "1 0 ~ <{1 0 1 0} {1 0 1 0 1 0}> 1 0" # ccn "2" # s "midi"

d15 $ ccv "120 30 110 40" # ccn "1" # s "midi"

d14 $ fast 2 $ ccv "0 1 0 1" # ccn "2" # s "midi"

d13 $ fast 1 $ ccv "0 10 127 13" # ccn "6" # s "midi"

d16 $ fast 2 $ ccv "127 {30 70} 60 110" # ccn "0" # s "midi"

--d16 $ fast 2 $ ccv "0 0 0 0" # ccn "3" # s "midi"

-- test midi channel 4

d1 $ s " ~ ~ bd <~ bd>"

d16 $ ccv "0 1" # ccn "4" # s "midi"

-- choose timestamp in video example

-- https://www.flok.livecoding.nyuadim.com:3000/s/frequent-tomato-frog-61217bfc

--d8 $ s "[[808bd:1] feel:4, <feel:1*16 [feel:1!7 [feel:1*6]]>]" # room 0.4 # krush 15 # speed (slow "<2 3>" (range 4 0.5 saw))
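The `once $ ccv "N" # ccn "3" # s "midi"` lines in the Tidal functions above are what select a visual on the Hydra side. As a rough sketch (not part of our performance code), the lookup can be written out in plain JavaScript. The helper names `encodeCc`, `decodeCc`, and `visualFor` are hypothetical, and the `(value + 1) / 128` scaling is an assumption inferred from the `cc[3] * 128 - 1` expression in our Hydra code.

```javascript
// Assumption: the MIDI bridge stores each controller value as (value + 1) / 128,
// which is what the `cc[3] * 128 - 1` expression in the Hydra code implies.
function encodeCc(value) {
  return (value + 1) / 128;
}

// Recover the integer that Tidal sent; Math.round guards against float noise.
function decodeCc(normalized) {
  return Math.round(normalized * 128) - 1;
}

// Order of the `visuals` array in the Hydra code (index = scene number).
const visualNames = [
  'logo', 'visualsOne', 'visualsTwo', 'visualsThree', 'visualsFour',
  'visualsFive', 'oops', 'shutdown', 'glitchLogo', 'macintosh',
  'wallpaper', 'buildup', 'flashlight'
];

// Scene number each Tidal function sends on controller 3.
const sceneCcv = {
  boot: 0, one: 1, two: 2, three: 3, four: 4, buildup: 5,
  shutdown: 7, drumss: 8, techno_drums: 10, buildup_2: 11, drop_2: 12
};

// Hypothetical lookup: which Hydra function a Tidal function ends up triggering.
function visualFor(tidalFunction) {
  return visualNames[sceneCcv[tidalFunction]];
}

console.log(visualFor('techno_drums'));
```

Writing the mapping out this way makes it easier to spot slots that are never triggered from Tidal, such as index 9.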

Visuals: It was very important for us that our visuals matched the clean aesthetic of Apple and the cute, dancy aesthetic of our concept. We worked very hard to make sure that our elements aligned well with each other. In the end, we have three main visuals in the piece:

- A video of tabs being opened, referencing multiple IM classes

- The Apple logo on a white screen, with glitch lines during the shutdown

- An imitation of Apple’s iconic purple mountain wallpaper

We modified all of them so that our composition feels cohesive. To build them we used P5.js (for the latter two) and Hydra. Here is the code we built:

function logo() {

let p5 = new P5()

s1.init({src: p5.canvas})

src(s1).out(o0)

p5.hide();

p5.background(255, 255, 255);

let appleLogo = p5.loadImage('https://i.imgur.com/UqV7ayC.png');

p5.draw = ()=>{

p5.image(appleLogo, (width - 400) / 2, (height - 500) / 2, 400, 500);

}

}

function visualsOne() {

src(o1).out()

s0.initVideo('https://upload.wikimedia.org/wikipedia/commons/b/bb/Screen_record_2024-04-30_at_5.54.36_PM.webm')

src(s0).out(o0)

render(o0)

}

function visualsTwo() {

src(s0)

.hue(() => 0.2 * time)

.out(o0)

}

function visualsThree() {

src(s0)

.hue(() => 0.2 * time + cc[2])

.rotate(0.2)

.modulateRotate(osc(3), 0.1)

.out(o0)

}

function visualsFour() {

src(s0)

.invert(()=>cc[3])

.rotate(0.2)

.modulateRotate(osc(3), 0.1)

.color(0.8, 0.2, 0.5)

.scale(() => Math.sin(time) * 0.1 + 1)

.out(o0)

}

function visualsFive() {

src(s0)

.rotate(0.2)

.modulateRotate(osc(3), 0.1)

.color(0.8, 0.2, 0.5)

.scale(()=>cc[1]*3)

.out(o0)

}

function oops() {

src(s0)

.rotate(0.2)

.modulateRotate(osc(3), 0.1)

.color(0.8, 0.2, 0.5)

.scale(()=>cc[1]*0.3)

.scrollY(3,()=>cc[0]*0.03)

.out(o0)

}

function shutdown() {

osc(4,0.4)

.thresh(0.9,0)

.modulate(src(s2)

.sub(gradient()),1)

.out(o1)

src(o0)

.saturate(1.1)

.modulate(osc(6,0,1.5)

.brightness(-0.5)

.modulate(

noise(cc[1]*5)

.sub(gradient()),1),0.01)

.layer(src(s2)

.mask(o1))

.scale(1.01)

.out(o0)

}

function glitchLogo() {

let p5 = new P5()

s1.init({src: p5.canvas})

src(s1).out()

p5.hide();

p5.background(255, 255, 255, 120);

p5.strokeWeight(0);

p5.stroke(0);

let prevCC = -1

let appleLogo = p5.loadImage('https://i.imgur.com/UqV7ayC.png');

p5.draw = ()=>{

p5.image(appleLogo, (width - 400) / 2, (height - 500) / 2, 400, 500);

let x = p5.random(width);

let length = p5.random(100, 500);

let depth = p5.random(1,3);

let y = p5.random(height);

p5.fill(0);

let ccActual = (cc[4] * 128) - 1;

if (prevCC !== ccActual) {

prevCC = ccActual;

} else { // do nothing if cc value is the same

return

}

if (ccActual > 0) { // only draw when ccActual > 0

p5.rect(x, y, length, depth);

}

}

}

//function macintosh() {

// osc(2).out()

//}

function flashlight() {

src(o1)

.mult(osc(2, -3, 2)) //blend is better or add

//.add(noise(2))//

//.sub(noise([0, 2]))

.out(o2)

src(o2).out(o0)

}

function wallpaper() {

s2.initImage("https://blog.livecoding.nyuadim.com/wp-content/uploads/appleWallpaper-scaled.jpg");

let p5 = new P5();

s1.init({src: p5.canvas});

src(s1).out(o1);

//src(o1).out(o0);

src(s2).layer(src(s1)).out();

p5.hide();

p5.noStroke();

p5.background(255, 255, 255, 0); //transparent background

p5.createCanvas(p5.windowWidth, p5.windowHeight);

let prevCC = -1;

let colors = [

p5.color(255, 198, 255, 135),

p5.color(233, 158, 255, 135),

p5.color(188, 95, 211, 135),

p5.color(142, 45, 226, 135),

p5.color(74, 20, 140, 125)

];

p5.draw = () => {

let ccActual = (cc[4] * 128) - 1;

if (prevCC !== ccActual) {

prevCC = ccActual;

} else { // do nothing if cc value is the same

return

}

if (ccActual <= 0) { // only draw when ccActual > 0

return;

}

p5.clear(); // Clear the canvas each time we draw

// Draw the right waves

for (let i = 0; i < colors.length; i++) {

p5.fill(colors[i]);

p5.noStroke();

// Define the peak points manually

let peaks = [

{x: width * 0.575, y: height * 0.9 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.6125, y: height * 0.74 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.675, y: height * 0.54 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.75, y: height * 0.7 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.8125, y: height * 0.4 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.8625, y: height * 0.5 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.9, y: height * 0.2 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.95, y: height * 0 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width, y: height * 0 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width, y: height * 0.18 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))}

];

// Draw the shape using curveVertex for smooth curves

p5.beginShape();

p5.vertex(width * 0.55, height);

// Use the first and last points as control points for a smoother curve at the start and end

p5.curveVertex(peaks[0].x, peaks[0].y);

// Draw the curves through the peaks

for (let peak of peaks) {

p5.curveVertex(peak.x, peak.y);

}

// Use the last point again for a smooth ending curve

p5.curveVertex(peaks[peaks.length - 1].x, peaks[peaks.length - 1].y);

p5.vertex(width * 1.35, height + 500); // End at bottom right

p5.endShape(p5.CLOSE);

}

// Draw the left waves

for (let i = 0; i < colors.length; i++) {

p5.fill(colors[i]);

p5.noStroke();

// Define the peak points relative to the canvas size

let peaks = [

{x: 0, y: height * 0.1 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.1 + p5.random(width * 0.025), y: height * 0.18 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.1875 + p5.random(width * 0.025), y: height * 0.36 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.3125 + p5.random(width * 0.025), y: height * 0.26 + p5.random((i - 1) * (height * 0.14), i * (height * 0.14))},

{x: width * 0.5 + p5.random(width * 0.025), y: height * 0.5 + p5.random((i - 1) * (height * 0.12), i * (height * 0.12))},

{x: width * 0.75, y: height * 1.2}

];

// Draw the shape using curveVertex for smooth curves

p5.beginShape();

p5.vertex(0, height); // Start at bottom left

// Use the first and last points as control points for a smoother curve at the start and end

p5.curveVertex(peaks[0].x, peaks[0].y);

// Draw the curves through the peaks

for (let peak of peaks) {

p5.curveVertex(peak.x, peak.y);

}

// Use the last point again for a smooth ending curve

p5.curveVertex(peaks[peaks.length - 1].x, peaks[peaks.length - 1].y);

p5.vertex(width * 0.75, height * 2); // End at bottom right

p5.endShape(p5.CLOSE);

}

};

}

function buildup() {

src(s2).layer(src(s1)).invert(()=>cc[6]).out();

}

function flashlight() {

src(o1)

.mult(osc(2, -3, 2)) //blend is better or add

//.add(noise(2))//

//.sub(noise([0, 2]))

.out()

}

var visuals = [

() => logo(),

() => visualsOne(),

() => visualsTwo(), // 2

() => visualsThree(),

() => visualsFour(), // 4

() => visualsFive(),

() => oops(), // 6

() => shutdown(),

() => glitchLogo(), // 8

() => {}, // 9: macintosh() is commented out above, so this slot is a no-op

() => wallpaper(), // 10

() => buildup(),

() => flashlight() // 12

]

src(s0)

.layer(src(s1))

.out()

var whichVisual = -1

update = () => {

ccActual = cc[3] * 128 - 1

if (whichVisual != ccActual) {

if (ccActual >= 0) {

whichVisual = ccActual;

visuals[whichVisual]();

}

}

}

render(o0)

// cc[2] controls colors/invert

// cc[3] controls which visual to trigger

// cc[4] controls when to trigger p5.js draw function
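The `update` function above only switches visuals when the value on controller 3 changes. A minimal, self-contained sketch of that pattern follows. `makeSceneSwitcher` is a hypothetical name, and the bounds check is an addition that our original code does not have.

```javascript
// Hypothetical helper: wraps an array of visual functions and returns an
// updater that runs a visual only when the requested index changes.
function makeSceneSwitcher(visuals) {
  let whichVisual = -1; // same "nothing selected yet" sentinel as the original

  return function update(ccActual) {
    // Same value as last time: do nothing, so the visual is not restarted.
    if (ccActual === whichVisual) return false;

    // Added guard (not in the original): ignore indexes outside the array.
    const valid = Number.isInteger(ccActual) &&
      ccActual >= 0 && ccActual < visuals.length;
    if (!valid) return false;

    whichVisual = ccActual;
    visuals[whichVisual]();
    return true;
  };
}

// Usage with stand-in visuals that only log their names.
const demoSwitch = makeSceneSwitcher([
  () => console.log('logo'),
  () => console.log('visualsOne')
]);
demoSwitch(0); // runs the first visual
demoSwitch(0); // unchanged, so nothing runs
demoSwitch(1); // runs the second visual
```

Returning a boolean is a design choice for the sketch only; it makes the behavior easy to check without a MIDI device attached.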

hush()

let p5 = new P5()

s1.init({src: p5.canvas})

src(s1).out()

p5.hide();

p5.background(255, 255, 255, 120);

p5.strokeWeight(0);

p5.stroke(0);

let appleLogo = p5.loadImage('https://i.imgur.com/UqV7ayC.png');

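let prevCC = -1; // previous value received on controller 4

let midiCCValue = -1; // latest value received on controller 4

function setupMidi() {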

// Open Web MIDI Access

if (navigator.requestMIDIAccess) {

navigator.requestMIDIAccess().then(onMIDISuccess, onMIDIFailure);

} else {

console.error('Web MIDI API is not supported in this browser.');

}

function onMIDISuccess(midiAccess) {

let inputs = midiAccess.inputs;

inputs.forEach((input) => {

input.onmidimessage = handleMIDIMessage;

});

}

function onMIDIFailure() {

console.error('Could not access your MIDI devices.');

}

// Handle incoming MIDI messages

function handleMIDIMessage(message) {

const [status, ccNumber, ccValue] = message.data;

console.log(message.data)

if (status === 176 && ccNumber === 4) { // Control Change on MIDI channel 1 (status 176), controller number 4

prevCC = midiCCValue;

midiCCValue = ccValue;

if (midiCCValue === 1) {

prevCC = midiCCValue;

p5.redraw();

}

}

}

}

p5.draw = ()=>{

p5.image(appleLogo, (width - 400) / 2, (height - 500) / 2, 400, 500);

let x = p5.random(width);

let length = p5.random(100, 500);

let depth = p5.random(1,3);

let y = p5.random(height);

p5.fill(0);

p5.rect(x, y, length, depth); // here I'd like to trigger this function via midi 4

}

p5.noLoop()

setupMidi()

hush()
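The experiment above reads raw Web MIDI messages and compares the status byte against 176. As a hedged sketch, a Control Change message can be decoded more generally like this. `parseControlChange` is a hypothetical helper, not something we used in the performance. It relies on the MIDI convention that Control Change status bytes run from 0xB0 to 0xBF, with the low four bits giving the channel.

```javascript
// Hypothetical helper: decode a 3-byte MIDI message (array or Uint8Array).
// Returns null for anything that is not a Control Change message.
function parseControlChange(data) {
  const [status, controller, value] = data;

  // The high nibble identifies the message type; 0xB0 means Control Change.
  if ((status & 0xf0) !== 0xb0) return null;

  return {
    channel: (status & 0x0f) + 1, // low nibble is 0-15, shown here as 1-16
    controller: controller,       // what Tidal sets with `ccn`
    value: value                  // what Tidal sets with `ccv`
  };
}

// Status 176 is 0xB0, so this is controller 4 on channel 1.
console.log(parseControlChange([176, 4, 1]));
```

Masking the status byte means the same handler keeps working if the messages arrive on a different MIDI channel.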

Contribution: Our team met regularly and was in constant communication through WhatsApp. Initially, Maya and Raya focused on building the visuals while Jun and Juanma focused on building the audio. Nevertheless, progress happened mostly during meetings, where we would all come up with ideas and give immediate feedback. For example, it was Juanma’s idea to recreate Apple’s wallpaper.

Once we had a draft, the roles blurred a lot. Jun worked with Maya on incorporating MIDI values into the P5.js sketches, and with Juanma on organizing the visuals into an array so they could be triggered through Tidal functions. Raya worked on the video visuals. Juanma focused on the latter part of the sound and on writing the Tidal functions, while Jun focused on the earlier part and on cleaning up the code. Overall, we are very proud of our debut as a Live Coding band! We worked very well together and feel that we constructed a product in which our own voices can be heard, and one that is also fun. Hopefully you all dance! 🕺🏼💃🏼