Hi everyone!

This is the documentation for my Composition Performance. The linked video is a recorded draft; the actual piece will be performed in class tomorrow with minor improvements!

Intro

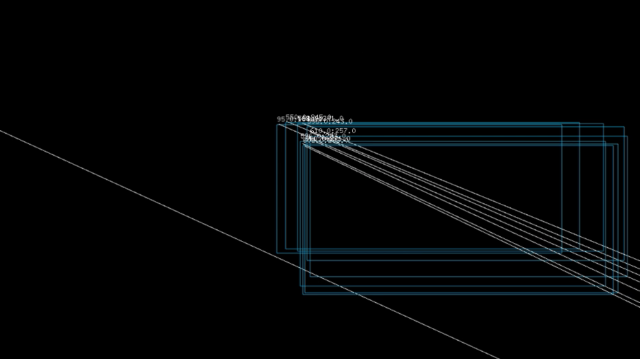

The theme of my performance is visualizing and sound-narrating a gameplay experience. My intention was to depict the energy, intensity, and old-fashioned vibe of Mario-like 2D games. I do this through heavy use of pixelated graphics, noise, and vibrant colors in Hydra, together with electronic sounds and chords (mostly from the ‘arpy’ library) and some self-recorded samples in TidalCycles.

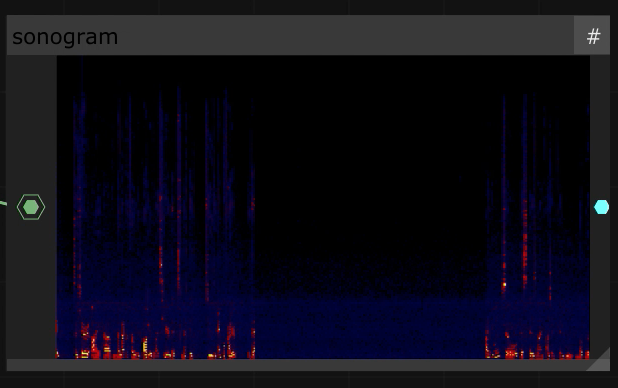

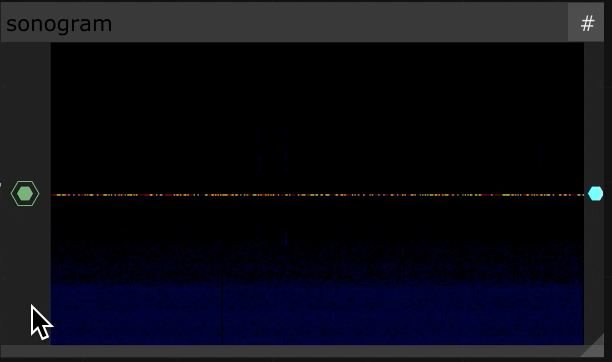

Audio

The audio is meant to take the audience through a full playthrough: the start of the game, some progress as score points are earned, a culmination and move to level 2, and finally “losing” the game, which ends the piece.

The audio elements that I recorded are the ones saying “level two”, “danger!”, “game over!”, “score”, and “welcome to the game!”:

-- level 2

d1 $ fast 0.5 $ s "amina:2" # room 1

-- danger

d1 $ fast 0.5 $ s "amina:0" # room 1

-- game over

d1 $ fast 0.5 $ s "amina:1" # room 1

-- score

d1 $ fast 0.5 $ s "amina:3" # room 1

-- welcome to the game

d1 $ fast 0.5 $ s "amina:4" # room 1

Learning from class examples of musical composition with chords, rhythms, and beat drops, I experimented with different notes, musical elements, and distortions. My piece starts off with a distorted welcome message that I recorded and edited:

d1 $ every 2 ("<0.25 0.125 0.5>" <~) $ s "amina:4"
  # squiz "<1 2.5 2>"
  # room (slow 4 $ range 0 0.2 saw)
  # gain 1.3
  # sz 0.5
  # orbit 1 -- orbit expects an integer

Building upon various game-like sounds, I create different compositions throughout the performance. For example:

-- some sort of electronic noisy beginning

-- i want to add "welcome to the game"

d1 $ fast 0.5 $ s "superhoover" >| note (arp "updown" (scale "minor" ("<5,2,4,6>"+"[0 0 2 5]") + "f4")) # room 0.3 # gain 0.8 -- change to 0.8

-- narration of a game without a game

-- builds up slightly

d3 $ stack [
  s "sine" >| note (scale "major" ("[<2,3>,0,2](3,8)") + "g5") # room 0.4,
  fast 2 $ s "hh*2 hh*2 hh*2 <hh*6 [hh*2]!3>" # room 0.4 # gain (range 1 1.2 rand)
]

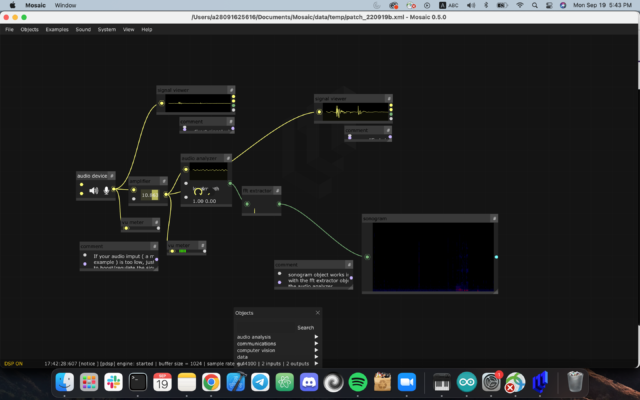

Visuals

My visuals rely on the MIDI output generated in TidalCycles and build upon each other. What starts as black-and-white, modulated, pixelated Voronoi noise gains color and grows into playful animations throughout.

For example:

voronoi(10,1,5).brightness(()=>Math.random()*0.15)
  .modulatePixelate(noise(()=>cc[1]+20,()=>cc[0]),100)
  .color(()=>cc[0],2.5,3.4).contrast(1.4)
  .out(o0)

// colored

voronoi(10,1,5).brightness(()=>Math.random()*0.15)
  .modulatePixelate(noise(25,()=>cc[0]),100)
  .color(()=>cc[0],2.5,3.4).contrast(1.4)
  .out(o0)

voronoi(10,1,5).brightness(()=>Math.random()*0.15)
  .modulatePixelate(noise(()=>cc[1]+20,()=>cc[0]),100)
  .color(()=>cc[0],()=>cc[1]-1.5,3.4).contrast(0.4)
  .out(o0)

// when adding score

voronoi(10,1,5).brightness(()=>Math.random()*0.15)
  .modulatePixelate(noise(()=>cc[2]*20,()=>cc[0]),100)
  .color(()=>cc[0],5,3.4).contrast(1.4)
  .add(shape(7),[cc[0],cc[1]*0.25,0.5,0.75,1])
  .out(o0)

// when dropping the beat

voronoi(10,1,5).brightness(()=>Math.random()*0.15)
  .modulatePixelate(noise(cc[0]+cc[1],0.5),100)
  .color(()=>cc[0],0.5,0.4).contrast(1.4)
  .out(o0)
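The Hydra snippets above read from a global `cc` array that holds the latest MIDI control values sent from TidalCycles. The setup code that fills this array is not part of this post, so the following is only a minimal sketch of how it might work. The helper name `handleControlChange` and the scaling to a 0 to 1 range (dividing by 127) are my assumptions for illustration, not the exact code used in the performance.

```javascript
// Hypothetical helper, not taken from the performance code.
// Assumes control changes arrive as standard 3-byte MIDI messages:
// [status, controllerNumber, value], with value between 0 and 127.

// One slot per possible controller number, all starting at 0.
const cc = Array(128).fill(0);

// Store an incoming control-change message as a number between 0 and 1.
// Returns true if the message was a control change, false otherwise.
function handleControlChange(cc, message) {
  const [status, controller, value] = message;
  // Status bytes 0xB0..0xBF mark control changes (one per MIDI channel).
  if ((status & 0xf0) !== 0xb0) return false;
  cc[controller] = value / 127; // assumed scaling to the 0..1 range
  return true;
}

// Example: controller 0 at roughly half, controller 1 at maximum.
handleControlChange(cc, [0xb0, 0, 64]);
handleControlChange(cc, [0xb0, 1, 127]);

// Wrapping the lookup in an arrow function, as in noise(()=>cc[1]+20),
// means the slot is read again every time the function is called.
const noiseScale = () => cc[1] + 20;
console.log(cc[0].toFixed(2), noiseScale());
```

One design point this makes visible: an argument written as an arrow function, such as `()=>cc[0]`, follows the music as new values arrive, while a bare expression such as `cc[0]+cc[1]` is read only once, at the moment the line is evaluated.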

Challenges

When curating the whole experience, I started with the visuals. Once I found something I liked (distorted Voronoi noise), I wanted to add pixel-like sound, and the idea of games came up. This is how I decided to record audio and connect it to the visuals. What I did not anticipate was how challenging it would be to link the transitions on both sides and time them appropriately.

Final reflections and future improvements

I put a lot of effort into making the sounds cohesive and creating the story that I wanted to tell with the composition. For the next assignment in class, I will try to make the transitions smoother and give the visuals a more powerful role in the overall storytelling.

Most importantly, I think I am finally starting to get the hang of live coding. This is my first fully “intentional” performance: although many elements will be improvised when performing live, this project feels less chaotic in its execution and in the thought process behind the experience.

The full code can be found here.