Devine Lu Linvega currently lives on a sailboat and can be found lurking in Discord chat rooms, hinting at possible bugs and easter eggs in his work. He also created ORCA, along with its companion programs (such as the synthesiser Pilot, which we will be using), under the design collective Hundred Rabbits (100r), which is based aboard Devine’s sailboat.

What is ORCA?

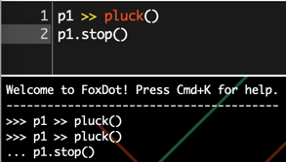

ORCA is a visual programming environment for making music, but one with no words, loops or long lines of code. The IDE is simply a grid of placeholder ‘.’ characters. ORCA does not produce sound on its own; instead it emits MIDI events, OSC messages and UDP packets that tell another program what sound to play. I use Pilot, a synthesizer created by Devine’s team, to produce my sounds. I could not get MIDI events through to Pilot, although I could send them to SuperCollider. That approach did not work either, because SuperCollider began picking up persistent background noise through the same port. Hence I used UDP packets, sent with the “;” operator, to create sounds.
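To make the UDP route concrete, here is a minimal Python sketch of the kind of packet Pilot receives. It assumes Pilot is running on the same machine and listening on UDP port 49161, and that a play message is a short ASCII string of channel, octave and note (for example `04C`). The function names `pilot_note` and `send_to_pilot` are my own, purely for illustration.

```python
import socket

PILOT_HOST = "127.0.0.1"  # assumption: Pilot runs on the same machine
PILOT_PORT = 49161        # assumption: Pilot's default UDP port


def pilot_note(channel: int, octave: int, note: str) -> bytes:
    """Build a play message such as b'04C' (channel 0, octave 4, note C)."""
    if not 0 <= channel <= 9:
        raise ValueError("this sketch only handles single-digit channels 0-9")
    if not 0 <= octave <= 9:
        raise ValueError("octave must be a single digit")
    if len(note) != 1 or not note.isalpha():
        raise ValueError("note must be a single letter")
    return f"{channel}{octave}{note}".encode("ascii")


def send_to_pilot(message: bytes,
                  host: str = PILOT_HOST,
                  port: int = PILOT_PORT) -> None:
    """Send one message as a single UDP datagram (fire and forget)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
```

Calling `send_to_pilot(pilot_note(0, 4, "C"))` should have roughly the same effect as banging `;04C` on the ORCA grid, provided those assumptions hold for your setup.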

How does ORCA work?

The interaction between code and computer flows through “bangs”, a concept borrowed from Max/MSP and Pure Data that follows the live-programming idea of immediate execution. A bang is a single trigger signal, which is quite different from traditional programming languages like C, where behaviour is built out of complex blocks of code.

Bangs are driven by frames, which are discrete time steps. Each frame represents one “tick” of the sequencer’s clock (its rate is determined by the BPM). Every frame, the grid is updated and evaluated. Uppercase operators in ORCA execute automatically on every frame, ensuring a continuous, rhythmic flow of actions, while lowercase operators run only when they receive a bang.
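The relationship between BPM and frames can be sketched in a few lines of Python. The value of 4 frames per beat is an assumption on my part (check your ORCA build), and the rule that lowercase operators wait for a bang reflects my understanding of ORCA rather than anything shown above. Both function names are hypothetical.

```python
def frame_seconds(bpm: float, frames_per_beat: int = 4) -> float:
    """Length of one frame in seconds at a given tempo.

    frames_per_beat=4 is an assumption, not a documented constant.
    """
    if bpm <= 0 or frames_per_beat <= 0:
        raise ValueError("bpm and frames_per_beat must be positive")
    return 60.0 / (bpm * frames_per_beat)


def runs_this_frame(glyph: str, banged: bool) -> bool:
    """Uppercase operators run every frame; lowercase ones only when banged."""
    return glyph.isupper() or banged
```

Under that assumption, 120 BPM gives a frame every 0.125 seconds, so an operator that fires every 4 frames fires once per beat.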

ORCA’s IDE was inspired by early 2000s games like Dwarf Fortress.

Operators

There are only 26 alphabetical operators, A through Z, plus 8 special operators. Some operators that will feel familiar from what we have seen in TidalCycles are:

C clock(rate mod): Outputs modulo of frame.

D delay(rate mod): Bangs on modulo of frame.

U uclid(step max): Bangs on Euclidean rhythm.

: midi(ch oct note velocity*): Send a MIDI note.

; pitch(oct note): Send pitch byte out. (Sends this as a UDP message to the synthesizer)
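To get a feel for how C, D and U behave from one frame to the next, here is a rough Python model of the three. It is a sketch based on my reading of the descriptions above, not ORCA's actual source; in particular, the Euclidean formula in `uclid` is one common way to spread hits evenly and may differ in detail from what ORCA does.

```python
def clock(frame: int, rate: int, mod: int) -> int:
    """C: count upward once every `rate` frames, wrapping at `mod`."""
    rate, mod = max(rate, 1), max(mod, 1)  # guard against zero inputs
    return (frame // rate) % mod


def delay(frame: int, rate: int, mod: int) -> bool:
    """D: bang whenever the frame number is a multiple of rate * mod."""
    rate, mod = max(rate, 1), max(mod, 1)
    return frame % (rate * mod) == 0


def uclid(frame: int, step: int, max_steps: int) -> bool:
    """U: bang `step` times, spread evenly over `max_steps` frames."""
    max_steps = max(max_steps, 1)
    bucket = (step * (frame + max_steps - 1)) % max_steps + step
    return bucket >= max_steps


# Render eight frames of a 3-in-8 Euclidean rhythm as text.
pattern = "".join("x" if uclid(f, 3, 8) else "." for f in range(8))
print(pattern)  # prints x..x..x.
```

Reading the output left to right, each `x` is a frame on which U would emit a bang and each `.` is a silent frame.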

Interestingly, the operators N, S, E and W, which represent the compass directions, can be used to send bangs in their respective directions. Devine shows that this works like sending marbles to collide with an object.
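The marble analogy can be mimicked with a toy one-row simulation in Python. This is only a sketch under simplifying assumptions: a single row, only the E operator, an E that turns into a bang (`*`) when it hits an occupied cell or the edge, and bangs that vanish on the next frame. The function `step_row` is hypothetical and is not part of ORCA.

```python
def step_row(row: str) -> str:
    """Advance a single grid row by one frame, moving every E eastward."""
    # Bangs only live for one frame, so clear last frame's bangs first.
    cells = ["." if c == "*" else c for c in row]
    # Walk right to left so an E that has just moved is not moved twice.
    for i in range(len(cells) - 1, -1, -1):
        if cells[i] != "E":
            continue
        if i + 1 < len(cells) and cells[i + 1] == ".":
            cells[i + 1] = "E"   # free cell ahead: roll one step east
            cells[i] = "."
        else:
            cells[i] = "*"       # blocked or at the edge: become a bang
    return "".join(cells)


row = "E..0"
for _ in range(4):
    row = step_row(row)
    print(row)
```

Here the marble rolls toward the `0`, becomes a bang right next to it, and then disappears, which is how a travelling E can trigger whatever sits at the end of its path.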

The operators above produce bangs. Combined with other functions, such as add and variables, they let us bring these bangs into contact with the events we have created. An example MIDI event is “:94g..”
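To unpack what an event like “:94g..” encodes, here is a small Python parser. It assumes the operator reads channel, octave, note, velocity and length from the five cells to its right, that digits and letters are read as base-36 values, that a lowercase note letter means a sharp, and that `.` means "left at its default". The names `MidiEvent` and `parse_midi` are mine, for illustration only.

```python
from typing import NamedTuple, Optional


class MidiEvent(NamedTuple):
    channel: int
    octave: int
    note: str
    velocity: Optional[str]  # None means the cell was left empty
    length: Optional[str]    # None means the cell was left empty


def parse_midi(op: str) -> MidiEvent:
    """Split a ':' operator string into its five fields."""
    if not op.startswith(":") or len(op) < 4:
        raise ValueError("expected ':' followed by channel, octave and note")
    # Pad with '.' so missing velocity/length cells count as empty.
    channel, octave, note, velocity, length = op[1:].ljust(5, ".")[:5]
    # Assumption: lowercase letters are sharps, uppercase are naturals.
    name = note.upper() + ("#" if note.islower() else "")
    return MidiEvent(
        channel=int(channel, 36),
        octave=int(octave, 36),
        note=name,
        velocity=None if velocity == "." else velocity,
        length=None if length == "." else length,
    )


print(parse_midi(":94g.."))
```

Under those assumptions the example reads as channel 9, octave 4, note G#, with velocity and length left at their defaults.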

The tool’s roots lie in a misunderstanding that Devine had about Tidal. ORCA tries to offer an accessible way into generative programming for people without a large programming background. In a way, the tool gamifies the experience: it feels more like playing at making music than coding it. Because ORCA is not compiled and does not halt on errors, live coders who experiment with it will not face syntax errors or compilation errors while editing their code.

However, the packaging of ORCA may seem inaccessible to some: the browser-based version requires WebMIDI to be installed and configured, and the locally run version, which runs on Node, has to be built from the terminal. On the other hand, ORCA is a very “cool” tool to use in front of an audience, as the interface between computer and programmer transcends that of traditional programming languages. From a performance perspective, ORCA can also connect to Unity, enabling interaction with 3D graphics. ORCA represents a paradigm shift in live coding by showing that constraints breed creativity. As Devine Lu Linvega states: “It’s for children. The documentation fits in a tweet”. Yet professionals use it for everything from IDM sets to interactive installations. ORCA is both an entry point and an advanced tool in the ecosystem of computational art.

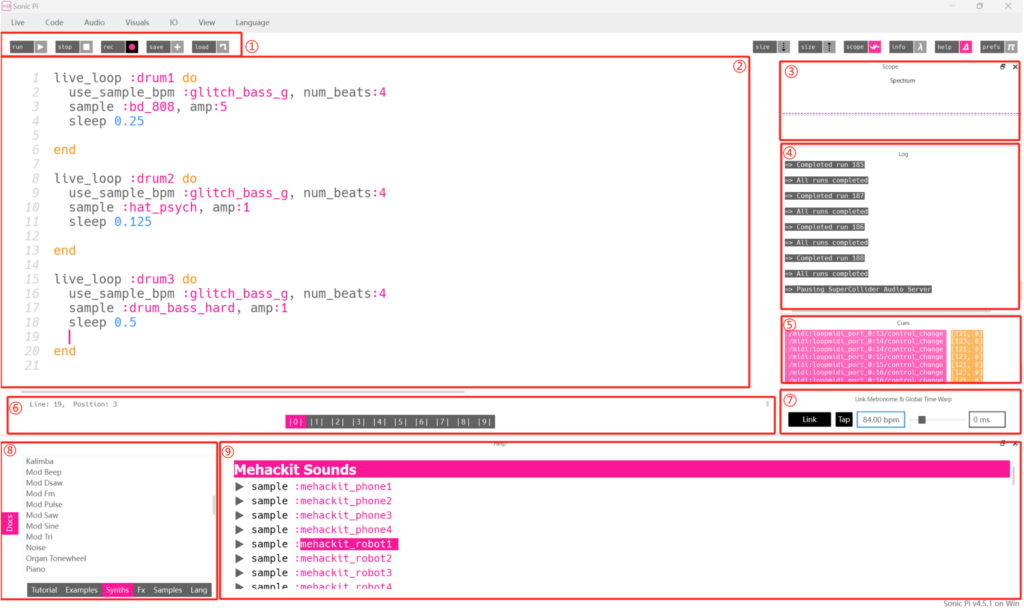

Below is a demo of ORCA in action.

Many ORCA users are technically proficient, employing the tool for solo experimentation with synths or DAWs, yet the platform thrives on community involvement and input. An engaged live coding community collaborates on Discord and Reddit, sharing techniques and directly interacting with creator Devine Lu Linvega, who actively participates in discussions and guides the ecosystem’s evolution through hints and open dialogue.