Process

Our process began with a clear vision of the environment we wanted to create: an abstract narrative that subtly tells the story of a group of friends watching TV and embarking on a surreal, psychedelic trip. We didn’t want to portray this explicitly; the goal was to evoke the strange sensations and shifting experiences they go through, using a mix of visual cues and atmospheric design. With the concept in place, we knew from the start that the project would need creative and unusual visuals, paired with immersive psytrance or liquid drum and bass audio to match the tone and energy of the story.

Once we had a solid sense of the visual and sonic direction, we dedicated ourselves to an intense 12-hour live coding jam session (with a couple of runs to the Baqala for snacks), which we streamed on Instagram. This session became a space for spontaneous experimentation and rapid development, where we started shaping the core of the experience. Although we made significant progress during the jam, the following days, especially after Tuesday, revealed some lingering technical issues and timing problems that still needed to be resolved. These challenges became our focus as we worked toward polishing the final piece.

Audio

Aadil and I mostly handled the audio. Here we focused on using a few effective voice samples to bring out parts of the performance we thought needed more attention. The samples came from our favourite songs (e.g., Everything In Its Right Place by Radiohead) and favourite genres (Techno, Hardgroove). For the buildup, we layered ambient textures and chopped samples with increasing intensity to simulate anticipation. Specifically, we manipulated legato ambience, gradually intensified the techno patterns, and used MIDI CC values to sync modulation effects like low-pass filters and crush.
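To make the CC sync more concrete, here is a minimal JavaScript sketch of how a pattern such as `fast 16 $ ccv "<0 100 80 20 0 100 80 20>"` (from the Tidal code later in this post) steps through its values over time. It is a simplified model of the timing, not Tidal's implementation, and the names `CC_STEPS` and `ccValueAt` are hypothetical, chosen only for this illustration.

```javascript
// Values taken from the ccv pattern "<0 100 80 20 0 100 80 20>".
const CC_STEPS = [0, 100, 80, 20, 0, 100, 80, 20];

// In Tidal, "<a b c>" plays one item per cycle, and `fast 16` squeezes
// 16 of those cycles into one. So at cycle position `t` (t >= 0, in
// cycles), the active step is floor(t * 16) modulo the list length.
function ccValueAt(t, steps = CC_STEPS, speed = 16) {
  const index = Math.floor(t * speed) % steps.length;
  return steps[index];
}

// Collect the 16 values sent during the first cycle.
const firstCycle = [];
for (let i = 0; i < 16; i++) {
  firstCycle.push(ccValueAt(i / 16));
}
console.log(firstCycle.join(" "));
```

Under this model, each cycle sends the eight-value shape twice, so anything in the visuals that follows this controller pulses sixteen times per cycle.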

In the midsections, we used heavy 808 kicks, distorted jungle breaks, and glitchy acid lines (like the “303” patterns) to keep up the tension and energy. For the end sections, we wanted to have some big piano synths that brought home the feeling of a comedown. The tonal shift was meant to echo that feeling of emotional release, what it feels like when a trip starts to settle.
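The mini build-up before the drop follows a simple doubling scheme: in the Tidal code later in this post, a `seqP` block plays the kick 4, 8, 16, and then 32 times over four consecutive cycles. The JavaScript sketch below models that schedule so the acceleration is easy to see. The function names `buildupHits` and `buildupOnsets` are hypothetical, and the sketch only describes timing; it does not produce sound.

```javascript
// Hits per cycle for a build-up that starts at `baseHits` and doubles
// every cycle, e.g. 4, 8, 16, 32 for a four-cycle build.
function buildupHits(cycles = 4, baseHits = 4) {
  const hits = [];
  for (let c = 0; c < cycles; c++) {
    hits.push(baseHits * 2 ** c);
  }
  return hits;
}

// Onset times (in cycles) of every kick across the whole build-up.
// Within cycle `c`, the hits are spaced evenly.
function buildupOnsets(cycles = 4, baseHits = 4) {
  const onsets = [];
  buildupHits(cycles, baseHits).forEach((count, c) => {
    for (let i = 0; i < count; i++) {
      onsets.push(c + i / count);
    }
  });
  return onsets;
}

console.log(buildupHits());
console.log(buildupOnsets().length);
```

Because the gap between kicks halves every cycle, the tension rises steadily without touching the tempo itself.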

Visuals

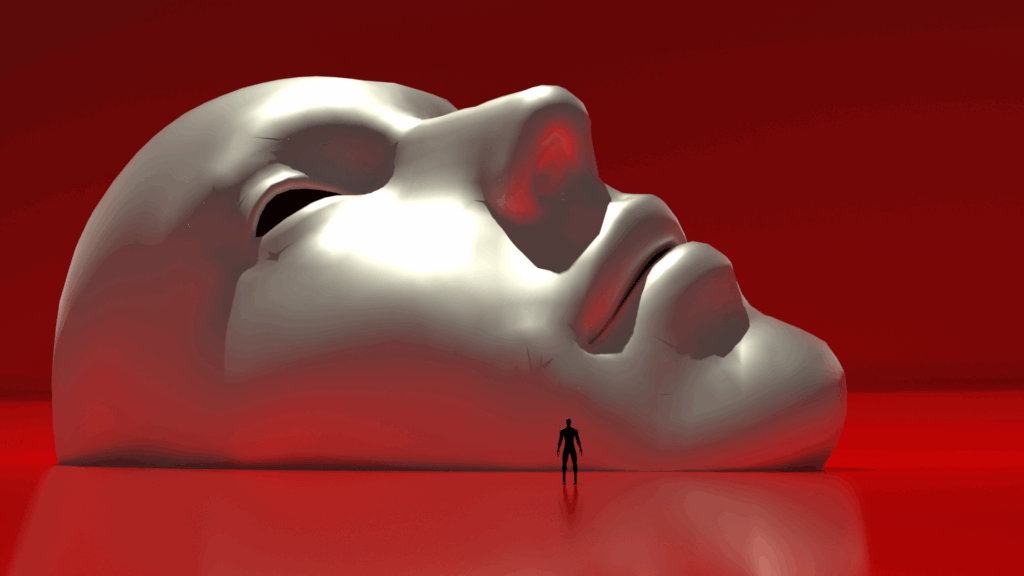

The goal was to mirror the full arc of a trip, with visuals locked to every change in the track. Mo Seif first sketched a concept for each moment, then built the look layer‑by‑layer, checking each draft against the audio until they matched perfectly. We had seven primary sections, each tied to a distinct musical cue.

1. Intro – “Sofa & Tabs”

The three of us slouched on a couch, half‑watching TV. We decide to take the journey of a lifetime; the trip timer starts.

2. Onset – “TV Gets Wavy”

The first tingle hits. The TV image begins to undulate – colors drifting, lines bending. A slow warp effect hints that reality is about to buckle.

3. First Peak – “Nixon + Rectangles”

Audio: vintage Nixon sample followed by drum drop.

Visuals: explosion of rectangle‑shaped, ultra‑psychedelic patterns that sync to each snare hit. The crowd pops; everything feels bigger, faster, weirder.

4. Chiller Section

A short breather featuring three “curated” GIFs:

Lo Siento, Wilson – pure goofy laughter.

Sassy the Sasquatch – laughter + tripping out

Pikachu tripping – those paranoid, deep‑thought vibes.

Together they nail the mood‑swings of a trip.

5. Meta Moment – “Pikachu Breaks the 4th Wall”

Pikachu dissolves into a live shot of the same GIF playing on my laptop in the dorm while we’re editing. Filming ourselves finishing the piece made it hilariously meta; syncing it to the beat was a nightmare, but it clicked.

6. Street‑Fighter Segment – “Choose Your Fighter”

Inspiration: I was playing GTA a while back and saw myself in the game as Trevor. We wanted to recreate that feeling by putting ourselves into a video game.

Build: we took 4‑5 photos of each of us, turned them into looping GIFs, and dropped them onto the classic character‑select screen with p5.js.

Plot Twist: Mo’s fighter “dies” (tongue out), then we smash‑cut to an Attack on Titan GIF, as if he has been resurrected.

7. Final Drop & Comedown – “Hard‑Groove + CC Sync”

The last drop pivots to a hard‑groove techno feel. Every strobe and colour hit is driven by MIDI CC values mapped to the track. We fade back to the original couch shot: the three of us staring straight into the lens, coming down – sweaty, wired, grinning.
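The Hydra code later in this post reads `cc[n]` and `ccActual[n]`, but the listing does not show where those arrays come from. The sketch below is one plausible way to fill them from incoming MIDI control-change messages. It assumes that `cc` holds values normalised to the 0 to 1 range and that `ccActual` holds the raw 0 to 127 value; that interpretation, along with the helper names `handleMidiMessage` and `frameForCc`, is an assumption made for this illustration.

```javascript
// Assumed layout: one slot per MIDI controller number (0-127).
const cc = new Array(128).fill(0);       // normalised 0..1 (assumption)
const ccActual = new Array(128).fill(0); // raw 0..127 (assumption)

// `data` is the 3-byte payload of a MIDI message:
// [status, controller number, value]. Control-change status bytes
// are 0xB0-0xBF (the low nibble is the channel).
function handleMidiMessage(data) {
  const [status, controller, value] = data;
  if ((status & 0xf0) !== 0xb0) return; // ignore non-CC messages
  ccActual[controller] = value;
  cc[controller] = value / 127;
}

// Map a normalised CC value to a valid GIF frame index, clamping so
// that a value of exactly 1 still lands on the last frame.
function frameForCc(ccValue, numFrames) {
  const index = Math.floor(ccValue * numFrames);
  return Math.min(Math.max(index, 0), numFrames - 1);
}

// In a browser, the handler could be wired up through the Web MIDI API:
// navigator.requestMIDIAccess().then((access) => {
//   for (const input of access.inputs.values()) {
//     input.onmidimessage = (msg) => handleMidiMessage(msg.data);
//   }
// });

// Simulate one control-change message on controller 2.
handleMidiMessage([0xb0, 2, 100]);
console.log(ccActual[2], cc[2].toFixed(2), frameForCc(cc[2], 5));
```

With p5.js, a call such as `mo.setFrame(frameForCc(cc[0], mo.numFrames()))` inside `draw` would then scrub a fighter GIF in time with the pattern.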

Here are the gifs we made for the street fighter visuals:

Here is the code we used:

Tidal:

-- Lets watch some tv + mini build up + mini drop (SEC 1)

-- hey guys why dont we watch some tv

d1 $ chop 2 $ loopAt 16 $ s "ambience" # legato 3 # gain 1 #lpf (range 200 400 sine)

once $ "our:3" # up "-5"

d10 $ fast 2 $ "our:4" # up "-6"

d2 $ slow 1 $ s "techno2:2*4" # gain 0.9 # room 0.1

--cc (eval separately)

d16 $ fast 16 $ ccv "<0 100 80 20 0 100 80 20>" # ccn "2" # s "midi"

d4 $ ghost $ slow 2 $ s "rm*16" # gain 0.75 # crush 2 # lpf 2500 # lpq(range 0.4 0.6 sine)

d5 $ stack [

n "0 ~ 0 ~ 0 ~ 0 ~" # "house",

n "11 ~ 11 ~ 11 ~ 11 ~" # s "808bd" # speed 1 # squiz 0 # nudge 0.01 # release 0.4 # gain 0.3,

slow 1 $ n "8 ~ 8 8 ~ 8 ~ 8" # s "jungle"

]

d6

$ linger 1

$ n "[d3@2 d3 _ d3 _ d3 _ _ c3 _]/1"

-- $ n "[d3 d3 c3 d3 d3 d3 c3 d3 f3 _ _ f3 _ _ c3]/2"

-- $ n "[f3 _ _ g3 _ _ g3 _]*2"

# s "supergong" # gain 1.2 #lpf 100 # lpq 0.5 # attack 0.04 # hold 2 # release 0.1

d7 $ stack [randslice 8 $ loopAt 8 $ slow 2 $ jux (rev) $ off 0.125 (|+| n "<12 7 5>") $ off 0.0625 (|+| n "<5 3>") $ cat [

n "0 0 0 0",

n "5 5 5 5",

n "4 4 4 4",

n "1 1 1 1"

]] # s "303" # gain 0.9 # legato 1 # cut 2 # krush 2

d10 $ fast 2 $ ccn "0*128" # ccv (range 200 400 $ sine) # s "midi"

--at the end of first visual

d1 silence

d2 silence

d5 silence

d6 silence

d7 silence

-- drugs r enemy (before the drop)

once $ s "sample:2" # gain 1.2

-- THE drums (the drop)

d11 $ stack [fast 2 $ s "[bd*2, hh*4, ~ cp]"] # gain 1.2

--after drums

d9 $ stack [

slow 1 $ s "techno2:2*4" # gain 0.9 # room 0.1,

stack [

n "0 ~ 0 ~ 0 ~ 0 ~" # "house",

n "11 ~ 11 ~ 11 ~ 11 ~" # s "808bd" # speed 1 # squiz 0 # nudge 0.01 # release 0.4 # gain 0.3,

slow 1 $ n "8 ~ 8 8 ~ 8 ~ 8" # s "jungle"

],

linger 1

$ n "[d3@2 d3 _ d3 _ d3 _ _ c3 _]/1"

-- $ n "[d3 d3 c3 d3 d3 d3 c3 d3 f3 _ _ f3 _ _ c3]/2"

-- $ n "[f3 _ _ g3 _ _ g3 _]*2"

# s "supertron" # gain 0.8 #lpf 100 # lpq 0.5 # attack 0.04 # hold 2 # release 0.1 ,

stack [randslice 8 $ loopAt 8 $ slow 2 $ jux (rev) $ off 0.125 (|+| n "<12 7 5>") $ off 0.0625 (|+| n "<5 3>") $ cat [

n "0 0 0 0",

n "5 5 5 5",

n "4 4 4 4",

n "1 1 1 1"

]] # s "303" # gain 0.9 # legato 1 # cut 2 # krush 2,

fast 16 $ ccv "<0 100 80 20 0 100 80 20>" # ccn "2" # s "midi"

] # gain 0

d11 silence

d9 silence

-- START XFADE WHEN READY FOR GIF MUSIC

d10

$ whenmod 16 4 (|+| 3)

$ jux (rev . (# s "arpy") . chunk 4 (iter 4))

$ off 0.125 (|+| 12)

$ off 0.25 (|+| 7)

$ n "[d1(3,8) f1(3,8) e1(3,8,2) a1(3,8,2)]/2" # s "arpy"

# room 0.5 # size 0.6 # lpf (range 200 8000 $ slow 2 $ sine)

# resonance (range 0.03 0.6 $ slow 2.3 $ sine)

# pan (range 0.1 0.9 $ rand)

# gain 0.6

-- -->7

d16 $ fast 16 $ ccv "<0 100 80 20 0 100 80 20>" # ccn "2" # s "midi"

-- GIF section Sudden drop from the mini drop, chill background matching music + sample audios for gifs (SEC 2.1)

-- GIF SECTION MUSIC

-- 1) DONNY

-- 2) WILSONNNNNN

once $ s "wilson" # gain 1.4

-- 3) PIKAPIKA

once $ s "pikapika" # gain 1.4

--background silence WHEN ICE SPICE

d10 silence -- aadil

-- Ice Spice Queen (SEC 2.2)

once $ "our:5" #gain 2.5 --j

-- Start boss music LOUD, reverse drop off glitchy into us fighting (SEC 3)

d1 $ fast 2 $ s "techno2:2*4" # gain 1.2 # room 0.1

--SELECT UR FIGHTER CC VALUES

d10 $ fast 4 $ ccn "0*128" # ccv (range 200 400 $ sine) # s "midi"

-- d16 $ slow 1 $ ccn "0*128" # ccv (range 0.9 1.2 $ slow 2 $ rand) # s "midi"

-----------------------------------------------------------

--cc for street fight

--d16 $ fast 16 $ ccv "0 60 0 70" # ccn "0" # s "midi"

--

-- WHEN STREET FIGHT MO VS AADIL

d2 $ stack [

sometimesBy 0.25 (|*| up "<2 5>") $

sometimesBy 0.2 (|-| up "<2 1>")

$ jux (rev) $

n "[a4 b4 c4 a4]*4" # s "superhammond" # cut 4 # distort 0.3 # up "-9"

# lpf (range 200 7000 $ slow 2 $ sine) # resonance ( range 0.03 0.5 $ slow 3 $ cosine) # octave (choose [4, 5, 6, 3]),

sometimesBy 0.15 (degradeBy 0.125) $

s "reverbkick*16" # n (irand(8)) # distort 0 # speed (range 0.9 1.2 $ slow 2 $ rand) # gain 0.9

] # room 0.5 # size 0.5 # pan (range 0.2 0.8 $ slow 2 $ sine) #gain 0.9

d5 $ fast 2 $ (|+| n "12")$ slowcat [

n "0 ~ 0 2 5 ~ 4 ~",

n "2 ~ 0 2 ~ 4 7 ~",

n "0 ~ 0 2 5 ~ 4 ~",

n "2 ~ 0 2 ~ 4 7 ~",

n "12 11 0 2 5 ~ 4 ~",

n "2 ~ 0 2 ~ 4 7 ~",

n "0 ~ 0 2 5 ~ 4 ~",

n "2 ~ 0 2 ~ 4 ~ 2"

] # s "supertron" # release 0.7 # distort 10 # krush 10 # room 0.5 #hpf 8000 # gain 0.7

-- silence d2 when the next DO starts playing

d2 silence

-- Quiet as in im dead, mini build up, mini drop after lick (SEC 4)

do {

d5 $ qtrigger $ filterWhen (>=0) silence;

d4 $ qtrigger $ filterWhen (>=0) $ stack[

s "hammermood ~" # room 0.5 # gain 1.8 # up "8",

fast 2 $ s "jvbass*2 jvbass*2 jvbass*2 <jvbass*6 [jvbass*2]!3>" # krush 9 # room 0.7

] # speed (slow 4 (range 1 2 saw));

d3 $ qtrigger $ filterWhen (>=8) $ seqP [

(0, 1, s "808bd:2*4"),

(1,2, s "808bd:2*8"),

(2,3, s "808bd:2*16"),

(3,4, s "808bd:2*32")

] # room 0.3 # hpf (slow 4 (100*saw + 100)) # speed (fast 4 (range 1 2 saw)) # gain 0.8;

}

d1 $ "[reverbkick(3,8), jvbass(3,8)]" # room 0.5#krush 6 # up "-9" # gain 1

--

drop_deez = do

{

d5 $ qtrigger $ filterWhen (>=0) $ fast 4 $ chop 2 $ loopAt 8 $ s "drumz:1" # gain 1.8 # legato 3 # cut 3;

d6 $ qtrigger $ filterWhen (>=4) $ s "jvbass" # gain 1 # room 4.5;

d10 $ qtrigger $ filterWhen (>=6) $ loopAt 4 $ s "acapella" # legato 3 # gain (range 0.7 1.5 saw);

d7 $ qtrigger $ filterWhen (>=0) silence;

d8 $ qtrigger $ filterWhen (>=0) silence;

d2 $ qtrigger $ filterWhen (>=0) silence;

d3 $ qtrigger $ filterWhen (>=0) silence;

d4 $ qtrigger $ filterWhen (>=0) silence;

d9 $ qtrigger $ filterWhen (>=8) $ s "amencutup*16" # n (irand(8)) # speed "2 1" # gain 1.8 # up "-2"

}

d11 $ slow 1 $ ccn "0*128" # ccv (range 1 62 saw) # s "midi"

drop_deez

--after drop settles

do

d1 silence

d6 silence

-- Quieting down with the couches, drug bad sample (SEC 5)

do {

d2 $ qtrigger $ filterWhen (>=6) silence;

d6 $ qtrigger $ filterWhen (>=4) silence;

d9 $ qtrigger $ filterWhen (>=0) silence;

d10 $ qtrigger $ filterWhen (>=0) silence;

d5 $ qtrigger $ filterWhen (>=8) silence;

d1 $ sound "our:1" # cut 4 # gain 1.5;

d12 $ qtrigger $ filterWhen (>=0) $ slow 4.1 $ ccv "10 5 4 3" # ccn "0" # s "midi";

}

d1 silence

once $ s "drugsrbad" # gain 1.4

hush

Here is the hydra code:

//SEC 1

s0.initImage("https://i.imgur.com/enIHg2L.jpeg"); //start scene

s1.initImage("https://i.imgur.com/SRNmgQR.jpeg"); //tv

src(s0).scale(.95).out()

// Synced with Radiohead sample

inc=1;

startZoom = 1;

update = ()=>{

if(startZoom){

inc+=0.01;

}

if (inc >= 3.4)

{

inc = 3.4;

return; //fix this

}

console.log(inc);

}

//tv zoom

src(s1)

//.scale(()=>inc)

//.scale(3.4)

//.modulate(noise(()=>cc[2],1))

//.modulate(s1,()=>cc[2]*5)

//.colorama(()=>cc[2]*0.1)

//.modulateKaleid(osc(()=>cc[2]**2,()=>ccActual[2],20),0.01) //changed to 0.1 to 0.01

.out()

//first visual

src(o0)

.hue("tan(st.x+st.y)")

.colorama("pow(tan(st.x),tan(st.y))")

.posterize("sin(st.x)*10.0", "cos(st.y)*10.0")

.luma(()=>cc[2],()=>cc[2])

.modulatePixelate(src(o0)

.shift("cos(st.x)", "sin(st.y)")

.scale(1.01), () => Math.sin(time / 10) * 10, () => Math.cos(time / 10) * 10)

.layer(osc(1, [0, 2].reverse()

.smooth(1 / Math.PI)

.ease('easeInOutQuad')

.fit(1 / Math.E)

.offset(1 / 5)

.fast(1 / 6), 300)

.mask(shape(4, 0, 1)

.colorama([0, 1].ease(() => Math.tan(time / 10))

.fit(1 / 9)

.offset(1 / 8)

.fast(1 / 7))))

.blend(o0, [1 / 100, 1 - (1 / 100)].reverse()

.smooth(1 / 2)

.fit(1 / 3)

.offset(1 / 4)

.fast(1 / 5))

.out()

//SEC 2.1 (GIFS)

//gorilla

s2.initVideo("https://i.imgur.com/YMApoXd.mp4");

src(s2).out()

//wilson

s2.initVideo("https://i.imgur.com/lEVy8F2.mp4");

src(s2).luma(0.1).colorama(0.1).out()

//pikachu

s0.initVideo("https://i.imgur.com/TS10M9l.mp4"); //pikachu

src(s0).out()

//load next clips

s1.initImage("https://i.imgur.com/mW62iXz.jpeg"); //still pikachu

//still pikachu

src(s1).out()

//SEC 2.2 (QUEEN)

//gang

s2.initVideo("https://i.imgur.com/i7msTNY.mp4"); //GANG

src(s2)

.modulate(s0,0.05)

.colorama(0)

.out()

//SEC 3

//choose sf

s2.initImage("https://i.imgur.com/E4SPvyb.jpeg"); //choose your player

src(s2).colorama(()=>ccActual[0]*0.001).modulate(noise(0.2,()=>cc[0]*0.001)).out()

//gang street fighter

let sf1 = new P5();

s3.init({src: sf1.canvas})

sf1.out

sf1.hide()

let mo = sf1.loadImage("https://media0.giphy.com/media/v1.Y2lkPTc5MGI3NjExMDc2NGU0aGEwZmJwZXQ0ZWttZng5aGNqdWp6azlja2ZmeXBlMGs0aiZlcD12MV9pbnRlcm5hbF9naWZfYnlfaWQmY3Q9cw/3I1aZCTDfNCd0Fclr4/giphy.gif")

let aadil = sf1.loadImage("https://media.giphy.com/media/gQFjPwZm0pMqW8yoUY/giphy.gif")

let back = sf1.loadImage("https://blog.livecoding.nyuadim.com/wp-content/uploads/background.jpg")

mo.play();

aadil.play();

sf1.scale(0.9);

sf1.fill(255);

sf1.draw = ()=>{

//sf1.fill(255)

//et img = ba.get();

sf1.image(back, 0, 0, sf1.width, sf1.height);

//sf1.video(ba,0,0 sf1.width sf1.height);

// sf1.image(mo, 250, sf1.height - mo.height); // bottom-left

//sf1.image(aadil, sf1.width - aadil.width-100, sf1.height+5 - aadil.height); // bottom-right

//sf1.height/1.3 - mo.height

sf1.image(mo, sf1.width/6, sf1.height/1.3 - mo.height, sf1.height/1.5, sf1.width/2.5); // bottom-left

sf1.image(aadil, (sf1.width/1.5) - aadil.width, sf1.height/1.3- aadil.height, sf1.height/1.5, sf1.width/2.5); //

sf1.fill(255);

// uncomment below to sync the gifs with tidal (cc[0] is assumed to be in the 0-1 range)

// mo.setFrame(Math.floor(cc[0] * (mo.numFrames() - 1)));

// aadil.setFrame(Math.floor(cc[0] * (aadil.numFrames() - 1)));

}

src(s3).out()

//SEC 4

//load clips

s0.initImage("https://i.imgur.com/dPIOZnu.jpeg"); // mo dead

s1.initImage("https://i.imgur.com/Ukz54v3.png"); //mo really dead

//mo dead

src(s0).out()

//mo really dead

inc=1;

startZoom = 1;

update = ()=>{

if(startZoom){

inc+=0.005;

}

if (inc >= 2.1)

{

inc = 2.1;

return; //fix this

}

console.log(inc);

}

src(s1)

//.scale(()=>inc)

.out()

//lickkkk

s2.initVideo("https://i.imgur.com/hvNHjEg.mp4"); //lick

src(s2).scale(1.3)

//.diff(s2,0.005)

//.colorama(1)

//.modulate(s2,0.05)

.out()

//post lick

osc(()=>cc[1]*100, 0.003, 1.6)

.modulateScale(osc(cc[1]*10, 0.7

, 1.1)

.kaleid(2.7))

.repeat(cc[1]*3, cc[1]*3)

.modulate(o0, 0.05)

.modulateKaleid(shape(4.2, cc[1], 0.8))

//.add(o0,cc[1])

.out(o0);

//SEC 5

//couch

s3.initImage("https://i.imgur.com/dGW1rt5.png"); //couch

inc=1;

startZoom = 1;

update = ()=>{

if(startZoom){

inc+=0.01;

}

if (inc >= 9)

{

inc = 999.5;

return; //fix this

}

console.log(inc);

}

src(s3)

.repeat(()=>ccActual[0],()=>ccActual[0])

//.scale(()=>inc)

.out()

Here is a frame from our livestream:

At one point we had two drops and just couldn’t see how to proceed creatively from there. But we went back through all of our previous blog posts and realized that we could simply come up with an idea, because we already had the tools to build it.

Thank you, Aaron, for all the help. I think I speak for all three of us when I say that, as graduating seniors, we really needed the fun we had in this class.