Here’s the link to my presentation:

SuperCollider is an environment and programming language designed for real-time audio synthesis and algorithmic composition. It provides an extensive framework for sound exploration, music composition, and interactive performance.

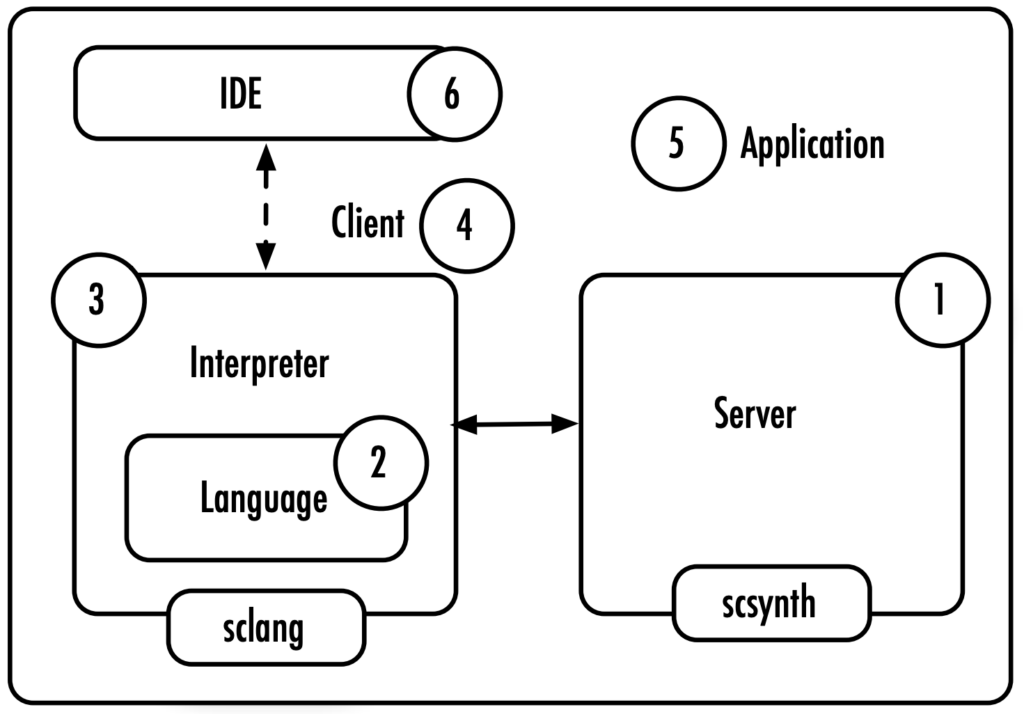

The application consists of three parts: the audio server (scsynth); the language interpreter (sclang), which also acts as a client to scsynth; and the IDE (scide). The IDE comes bundled with SuperCollider, while the server and the client (the language interpreter) are two completely autonomous programs.

What is the Interpreter, and what is the Server?

SuperCollider is made of two distinct applications: the server and the language.

To put it more simply:

Everything you type in SuperCollider is in the SuperCollider language (the client): that’s where you write and execute commands, and see results in the Post window.

Everything that makes sound in SuperCollider comes from the server, the “sound engine”, which you control through the SuperCollider language.
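As a minimal sketch of this split (not taken from the demo), the lines below can be evaluated one at a time in the IDE. The first line only touches the language; the others involve the server. It assumes the default server variable `s` and the interpreter variable `x`, and that each line is run only after the previous one has finished (booting the server takes a moment).

```supercollider
// Language only: the text appears in the Post window, no sound is involved.
"Hello from sclang".postln;

// Boot the audio server (scsynth). `s` refers to the default server.
s.boot;

// After the server has booted: play a 440 Hz sine tone on both channels.
// The function is written in the language, but the sound is made by the server.
x = { SinOsc.ar(440, 0, 0.2) ! 2 }.play;

// Stop the tone by freeing the synth on the server.
x.free;
```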

SuperCollider for real-time audio synthesis: SC is optimized for synthesizing audio signals in real time. This makes it well suited to live performance as well as sound installations and events.
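To give a feel for what “real-time” means in practice, here is a small sketch (my own illustration, assuming the server is already booted) in which a sound keeps playing while one of its parameters is changed from the language. The argument name `freq` and the variable `x` are arbitrary choices.

```supercollider
// Start a sawtooth tone; `freq` becomes a control that can be changed later.
x = { |freq = 220| Saw.ar(freq, 0.1) ! 2 }.play;

// While the sound is running, evaluate these lines one by one
// to change the pitch without stopping the synth.
x.set(\freq, 330);
x.set(\freq, 165);

// Free the synth when done.
x.free;
```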

SuperCollider for algorithmic composition: One of the strengths of SC is that it combines two approaches to audio synthesis that are at once complementary and antagonistic. On one hand, it makes it possible to carry out low-level signal processing operations. On the other hand, it lets composers express themselves through higher-level abstractions that are more relevant to composing music (e.g. scales, rhythmic patterns, etc.).
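The two levels can be sketched side by side. This is an illustrative example rather than code from the demo: the SynthDef name `\blip`, the chosen scale, and the note and rhythm values are all made up for the purpose. It assumes the server is booted; evaluate the first block, then the second.

```supercollider
(
// Low level: build a sound from unit generators (oscillator, envelope, output).
SynthDef(\blip, { |out = 0, freq = 440, amp = 0.2|
    // Percussive envelope; doneAction: 2 frees the synth when it finishes.
    var env = EnvGen.kr(Env.perc(0.01, 0.3), doneAction: 2);
    var sig = SinOsc.ar(freq) * env * amp;
    Out.ar(out, sig ! 2);
}).add;
)

(
// High level: describe the music in terms of scale degrees and durations.
p = Pbind(
    \instrument, \blip,
    \scale, Scale.minor,
    \degree, Pseq([0, 2, 4, 7, 4, 2], inf),  // melody as scale degrees
    \dur, Pseq([0.25, 0.25, 0.5], inf)       // rhythm in beats
).play;
)

// Stop the pattern.
p.stop;
```

The pattern never mentions frequencies in Hz; the conversion from scale degree to frequency happens behind the scenes before each note is sent to the synth.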

SuperCollider as a programming language: SC is also a programming language in its own right, interpreted by sclang. It belongs to the broader family of “object-oriented” languages. It’s based on Smalltalk and C, and has a very strong set of Collection classes such as Array.
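A few lines that need no server at all show the object-oriented side and the Collection classes. This is a small sketch of my own; the variable `a` and the values are arbitrary. Each line can be evaluated on its own, and the comment shows what `postln` writes to the Post window.

```supercollider
// Everything is an object, including numbers and arrays.
3.class.postln;                        // posts Integer
[1, 2, 3].class.postln;                // posts Array

// Collection methods take functions as arguments.
a = [1, 2, 3, 4];
a.collect({ |x| x * x }).postln;       // posts [ 1, 4, 9, 16 ]
a.select({ |x| x.even }).postln;       // posts [ 2, 4 ]
a.reverse.postln;                      // posts [ 4, 3, 2, 1 ]
a.sum.postln;                          // posts 10
```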

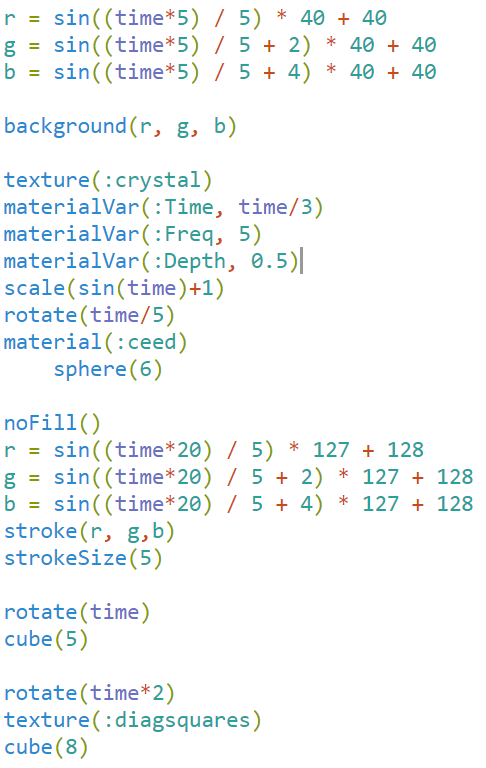

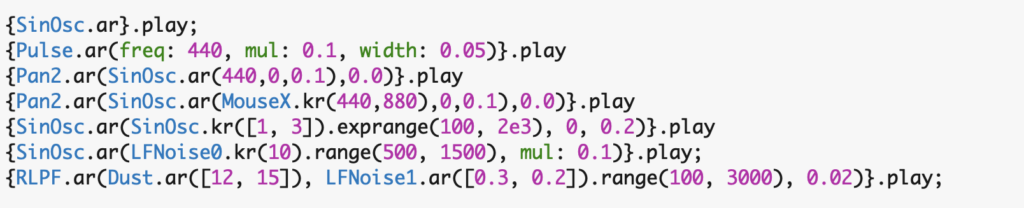

Some code snippets from the demo:

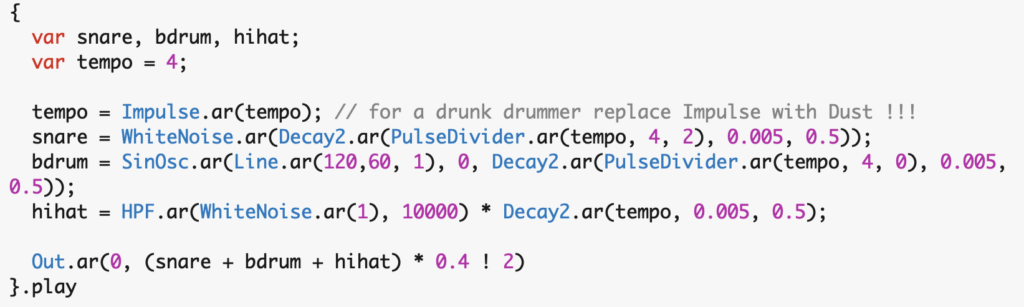

For drum roll sounds:

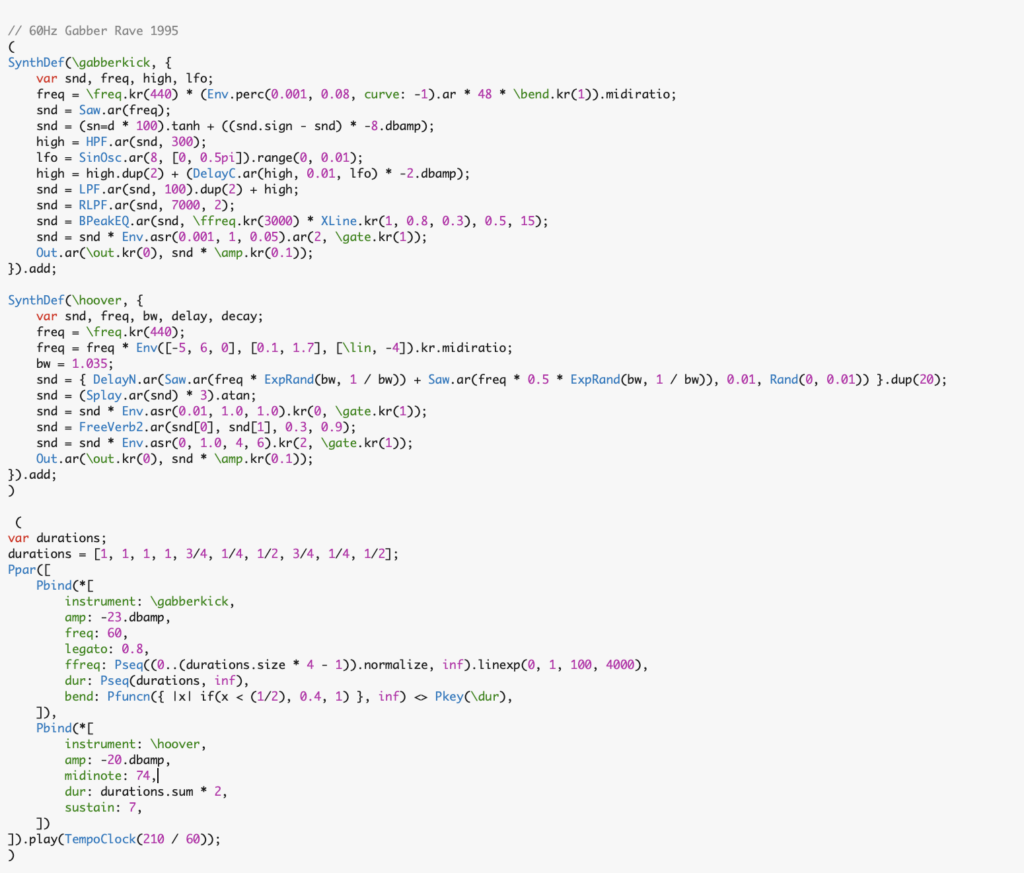

An interesting example of 60Hz Gabber Rave 1995 that I took from the internet:

Here’s a recording of some small sound clips made with SuperCollider (shown in class):

https://drive.google.com/file/d/1I_HxymG_OLzdw_rirGJ9iYXLxzyK8n_k/view?usp=drive_link