Description

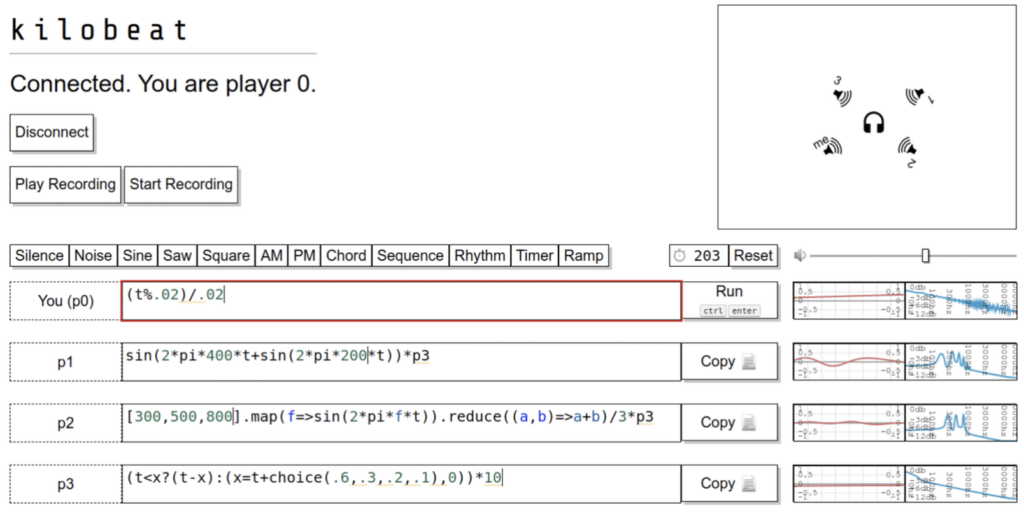

The coding platform I chose for this research project is Mercury. Mercury is a “minimal and human-readable language for the live coding of algorithmic electronic music.” Created in 2019, it is relatively new and draws inspiration from many platforms that we have used or discussed before. Mercury has its own text editor that supports both sound and visuals; since it is made primarily for music, I decided to dive deeper into the sound side.

Process

Since the documentation on Mercury's music features is not very thorough, I found it easier to learn from the example files and code that the text editor can load at random. I went through the available sound samples and files and tested them in the online editor, which let me comment out code and click and drag whenever I needed to. Because this was my first time testing these files, I experimented there until I reached a result I liked, and then started writing similar code in the Mercury text editor. It did not sound exactly the same there, so I kept making changes until it sounded better.

One of the randomly chosen files I came across used the following instruments. I took it as inspiration, changing it and adding more to it:

new sample kick_909 time(1/4)

new sample snare_909 time(1/2 1/4)

new sample hat_909 time(1/4 1/8)

new sample clap_909 time(1/2)
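To make the rhythm in these four lines easier to see, here is a rough Python sketch of where each sample would land within one bar. It assumes that the first argument of `time()` is the interval between hits and the optional second argument is an offset, both expressed as fractions of a bar. That reading is an assumption rather than something confirmed here, and the `onsets` helper is hypothetical, not part of Mercury.

```python
from fractions import Fraction


def onsets(division, offset=Fraction(0), bars=1):
    """List hit positions (in bars) for a sample that fires every
    `division` of a bar, shifted by `offset`.

    Assumption: this mirrors time(division offset) in Mercury.
    """
    division = Fraction(division)
    position = Fraction(offset)
    hits = []
    while position < bars:
        hits.append(position)
        position += division
    return hits


# The four instruments from the example file, under the assumption above.
pattern = {
    "kick_909": onsets(Fraction(1, 4)),                   # time(1/4)
    "snare_909": onsets(Fraction(1, 2), Fraction(1, 4)),  # time(1/2 1/4)
    "hat_909": onsets(Fraction(1, 4), Fraction(1, 8)),    # time(1/4 1/8)
    "clap_909": onsets(Fraction(1, 2)),                   # time(1/2)
}

for name, hits in pattern.items():
    print(name, [str(hit) for hit in hits])
```

If the assumption holds, the kick lands on every quarter note, the snare on the second and fourth quarters, the hat on the off-beats between the kicks, and the clap on the first and third quarters.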

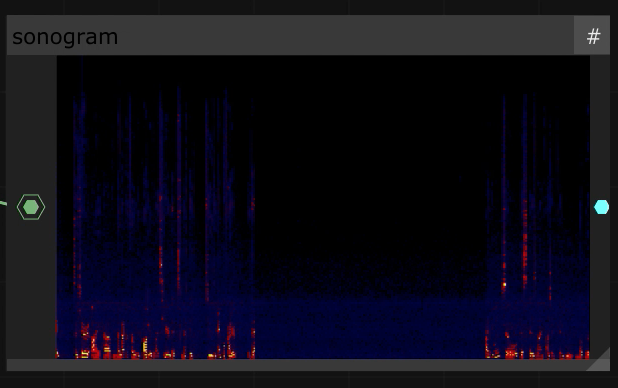

Below is a video of the “live coding performance” that I tested out:

Evaluation

Although Mercury's audio samples and effects sounded really nice and allowed for experimentation as well as mixing and matching, there are a few aspects that I am not a huge fan of: the limited documentation on the sounds and how they can be used, the fact that you cannot execute code line by line but only the whole file at once, and the fact that you cannot use the cursor to move to another line or select text. Still, it was enjoyable software to explore, and the way the text resizes as you type and reach the end of a line makes it engaging.