Group Members: Aalya, Alia, Xinyue, Yeji

Final Presentation

https://drive.google.com/file/d/14vo1y2KyfkNQ7snYH1QXRpyS5YRDYc8a/view

Video Link

Inspiration

The audio and the visuals of our final project started from different inspirations. For the audio, we took a small segment from our previous beat drop assignment and built on it. For the visuals, we wanted a colorful yet minimalistic output, so we started with a colorful circle with black squiggly lines and created variations of it.

Who did what:

- Tidal: Aalya and Xinyue

- Hydra: Alia and Yeji

Project Progress

Tidal Process

For this project, we took a similar approach to developing our sounds. We began by laying out a few different audio samples and listening to them. Then we pieced together the sounds we thought fit best, and that eventually turned into a specific theme for our performance. Once we had set that foundation, it was easier to break the performance down into a few main parts:

- Intro

- Verse

- Pre-Chorus / Build Up

- Chorus / Beat Drop

- Bridge / Transition to outro

- Outro

The next step in the music-making process was to figure out the intensity of the beats and how we wanted specific parts to sound. How could we manipulate the sounds to get a more effective build-up and beat drop? How could we make sure that the transitions were smooth and the sounds weren’t choppy? These were the questions that came up while we were brainstorming.

Some of the more prominent melodies in our music were:

jux rev $ every 1 (slow 2) $ struct "t(3,8) t(5,8)" $ n "<0 1>" # s "supercomparator" # gain 0.9 # krush 1.3 # room 1.1

jux rev $ every 1 (slow 2) $ struct "t(3,8)" $ n "1" # s "supercomparator" # gain 0.9 # krush 1.3 # room 1.1 # up "-2 1 0" # pan (fast 4 sine) # leslie 8

struct "t*4" $ n "1" # s "supercomparator" # gain 1.3 # krush 1.3 # room 1.1 # up "-2 1 0" # pan (fast 4 sine) # lpf (slow 4 (1000*sine + 100))

n "f'min ~ [df'maj ef'maj] f'min ~ ~ ~ ~" # s "superpiano" # gain 0.8 # room 2

Next, it was a matter of developing the sounds further. For example, there was one melody that we particularly wanted to stand out, so to support it we layered sounds and effects onto it until it developed into something we liked. We did the same for every part of the music. It was all about balance and smooth transitions, forming a cohesive auditory piece that also complemented the visuals.

Hydra Process

We started with a spinning colorful image (based on a color theme that we like) bounded by a circle shape. We then added noise to have moving squiggly lines at the center and decided to have that as our starting visual.

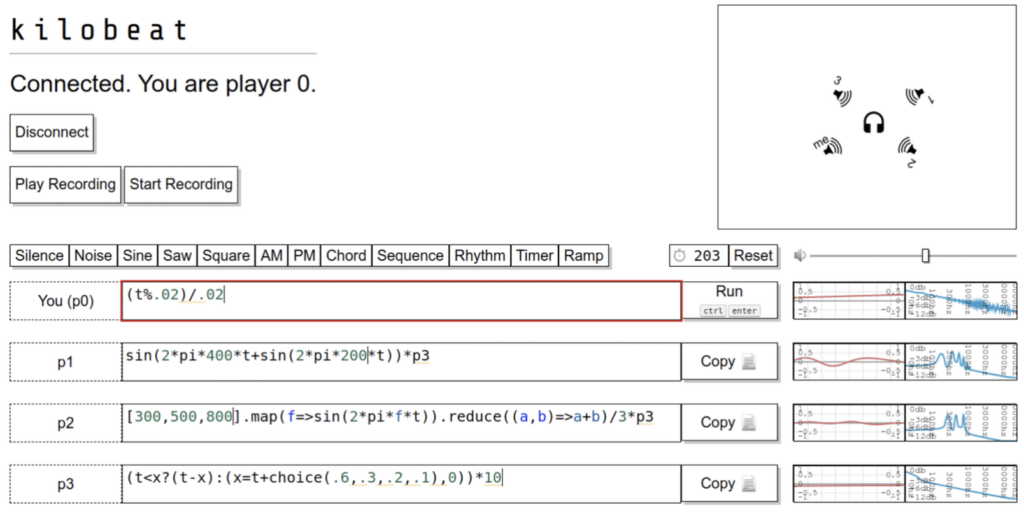

Alia and Yeji then started to experiment with this visual separately on different outputs, with all screens rendered so we could see them at the same time and decide what we liked and what we didn’t.

At first, we focused purely on finding visuals we liked. Only afterwards did we coordinate with Aalya and Xinyue to match the visuals to the beats in the audio.

We then tested taking different outputs as sources and adding functions to them. We played around to make sure that the visuals stayed coherent when changed: the differences should not be too drastic, but still large enough to match the beat change.

Next, we looked at the separate visuals one by one, deleted the ones we did not all agree on, and kept the ones we liked. By that time, Aalya and Xinyue were more or less done with the audio, so we all had something to work with and put together.

This was more of a trial-and-error process: we improvised while they played the audio, saw what matched, and mapped the visuals to the sounds. Here, we also used the cc values to add variation, such as increasing the number of circles or blobs on the screen with the beat or creating pulsing effects.
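The cc mapping described above can be sketched in plain JavaScript. This is a minimal illustration, not the code used in the performance: it assumes `cc` holds MIDI control values normalized to the range 0..1, and `repeatsFromCc` and `pulseFromCc` are hypothetical helper names introduced only for this example.

```javascript
// Hypothetical stand-in for the `cc` array that a MIDI bridge would fill in.
// Assumption: every value is normalized to the range 0..1.
const cc = new Array(128).fill(0);

// Keep a value inside 0..1 so a stray reading cannot blow up the visual.
function clamp01(value) {
  return Math.min(1, Math.max(0, value));
}

// Map a cc value to a whole number of repeats between `min` and `max`,
// e.g. to grow the number of circles on screen with the beat.
function repeatsFromCc(value, min = 1, max = 4) {
  return Math.round(min + clamp01(value) * (max - min));
}

// Map a cc value to a scale factor between 1 and 1 + depth,
// which gives a pulsing effect when the cc value follows the beat.
function pulseFromCc(value, depth = 0.5) {
  return 1 + clamp01(value) * depth;
}

// In Hydra, the helpers would be wrapped in arrow functions so that they are
// re-evaluated on every frame, for example:
// shape(100, 0.3)
//   .repeat(() => repeatsFromCc(cc[1]), () => repeatsFromCc(cc[1]))
//   .scale(() => pulseFromCc(cc[0]))
//   .out(o0)
```

Clamping first means an out-of-range value still produces a usable repeat count or scale factor instead of an extreme one.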

We wanted the visuals to match the build-up of the audio. To do this, we increased the complexity of the visuals step by step: from a simple circle to an oval, a kaleid, a fluid pattern, a zoomed-out effect, and a fast butterfly effect, ultimately transitioning into the drop visual. To keep the style consistent throughout the composition, we stuck to the color scheme shown inside the circle at the very beginning. As the beat built up, we used a more intense, saturated version of that color scheme, along with cc values that matched the faster beat.

now_drop = ()=> src(o0).modulate(noise(()=>cc[4]*5,()=>cc[0]*3)).rotate(()=>cc[4]*5+1, 0.9).repeatX(()=>-ccActual[1]+4).repeatY(()=>-ccActual[1]+4).blend(o0).color(()=>cc[4]*10,5,2)

now_drop().out(o1)

render(o1)

For the outro, we toned the saturation back down to its default and silenced the cc values as we silenced each melody and beat, returning the visuals to their original state. We also smoothed out the noise of the circle further to signal that the ending was near.

src(o0).mult(osc(0,0,()=>Math.sin(time/1.5)+2 ) ).diff(noise(2,.4).brightness(0.1).contrast(1).mult(osc(9,0,13.95).rotate( ()=>time/2 )).rotate( ()=>time/2).mult( shape(100,()=>cc[2]*1,0.2).scale(1,.6,1) )).out(o0)

render(o0)

Evaluation and Challenges

The main challenge we encountered while developing the performance was finding a time that worked for everyone and communicating our ideas clearly enough to keep everyone on the same page.

Another issue was that the cc values were not reflected properly, or not in the same way for everyone. As a result, the visuals were in sync with the audio for some of us but not for others.
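One defensive pattern that might help in a situation like this is to read cc values through a small guard, so that a missing or invalid value falls back to a known default instead of breaking the visual. The sketch below is an assumption-laden illustration, not a diagnosis of the actual cause: `ccOr` is a hypothetical helper name, and the array passed to it stands in for the `cc` array used in the Hydra code.

```javascript
// Return cc[index] if it is a finite number, otherwise return `fallback`.
// `ccOr` is a hypothetical helper introduced for illustration only.
function ccOr(ccArray, index, fallback = 0) {
  const value = ccArray ? ccArray[index] : undefined;
  return Number.isFinite(value) ? value : fallback;
}

// Possible use inside a Hydra sketch (assumes a global `cc` array exists):
// shape(100, () => ccOr(cc, 2, 0.3), 0.2).out(o0)
```

With a guard like this, a machine that has not received any cc messages yet still shows the default shape, which makes it easier to tell a connection problem apart from a problem in the visual code.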

When Aalya and Xinyue were working on the music, coming up with different beats was fast, but putting the pieces together into a complete and cohesive composition took some time.

One aspect that worked very well was the distribution of work. Every part of the project was taken care of, so the visual and sound elements ended up balanced.

Overall, this project brought us closer as a group and fostered our creativity. Combining our work into something new was rewarding, all the more so because everyone was on the same page and working towards the same goal. We are proud of our final performance and satisfied with the progress we made. Everyone stayed in good spirits throughout the process, which created an atmosphere of trust and collaboration. The project let us use our individual strengths for the group’s benefit and learn from each other in a fun environment.