Overview

The process of creating our final performance was hectic but rewarding! We had a lot of fun working together and combining all our ideas into one cohesive piece. We ran into several challenges along the way, such as matching the visuals to the sounds and connecting the build-ups to the beat drops in a way that made sense, but we got there in the end!

We found it hard to split the work into sections and have each of us work on our own section, so our approach was to work on everything together. For example, Louis took charge of creating the music, so Shengyang and Debbie supported him during this process and offered feedback instead of working on other things (Debbie took charge of Hydra and MIDI, while Shengyang took charge of p5.js). This definitely prolonged the process, but we found that working this way made the final result feel more cohesive. If we could do this project again, we would choose a theme first, so that we could split the work into sections and still combine it into a cohesive piece.

Overall, we are very proud of how far we have come! It was an amazing experience working with the team, everyone in the class, and Professor Aaron, and we learned a lot of skills that we will continue to use in the future!

Debbie’s part:

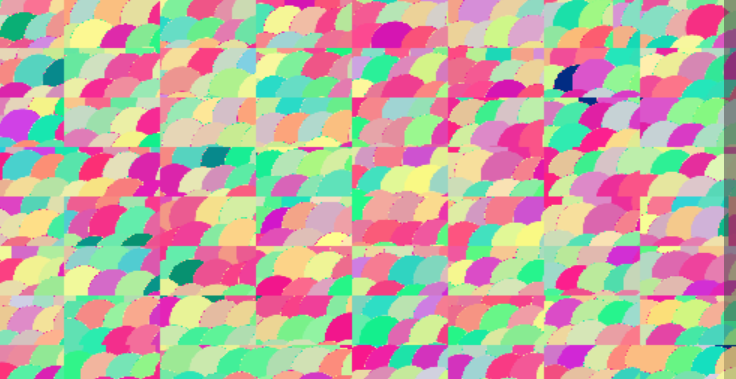

For our final performance, I focused mainly on creating visuals (with Shengyang) and tying the whole piece together. Initially, I created the following visual with p5.js.

I really liked this visual because the size of the hole in the centre could be manipulated to mimic the vibrations of a speaker, or even a beating heart. My issue with it was that I found it difficult to visualise what else I could do with it besides changing the size of the hole. I tried changing the shapes from squares to something else and combining them with Hydra, but nothing felt right. I wanted a visual that could be used throughout the whole piece and changed in unique but cohesive ways. So, a week before the performance, I decided to replace it with the following:

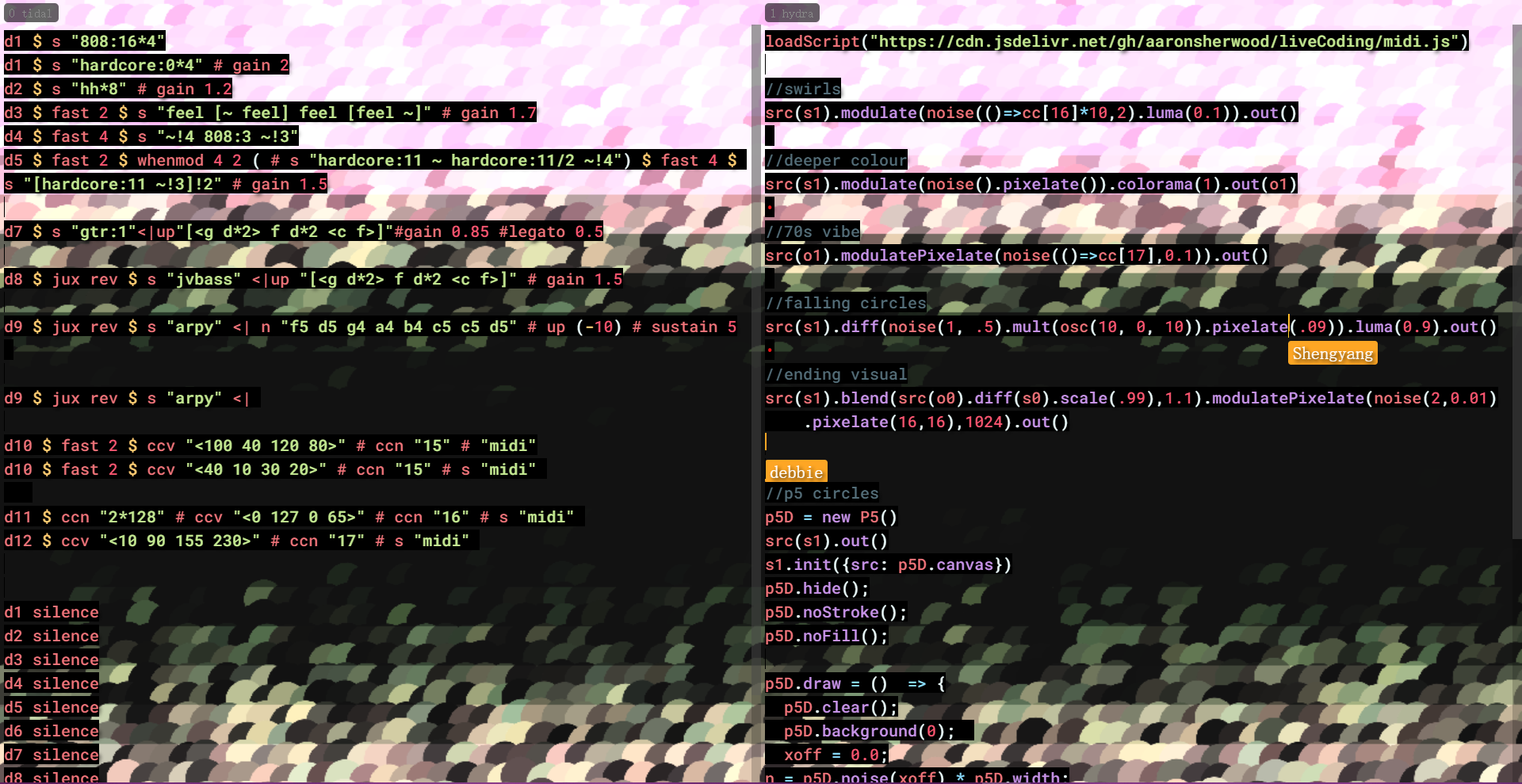

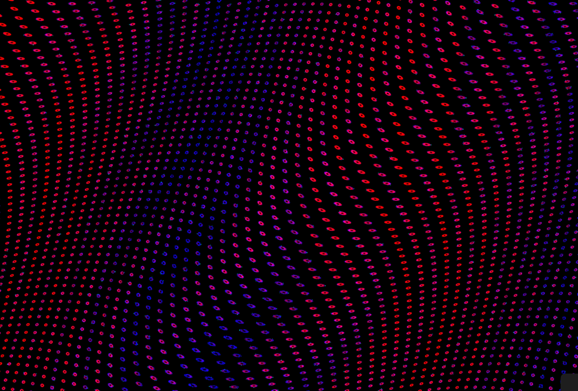

What I loved about this visual is that the code was so straightforward, and small changes to it could transform the visual in huge ways while keeping it cohesive. This visual was made entirely with Hydra. Here is a snippet of the code:

```javascript
osc(215, 0.1, 20)
  .modulate(
    osc(2, -0.3, 100)
      .rotate(15)
  )
  .color(0.9, 0.0, 0.9)
  .modulate(
    osc(6, -0.1)
      .rotate(9)
  )
  .add(
    osc(10, -0.9, 900)
      .color(1, 0, 1)
  )
  .mult(
    shape(900, () => cc[1] / 4, 0.5) // MIDI CC 1 controls the shape radius
      .luma()
      .repeatX(() => cc[3] * 100)    // MIDI CC 3 controls the tiling
      .repeatY(() => cc[3] * 100)
      .colorama(10)
  )
  .modulate(
    osc(9, -0.3, 900)
      .rotate(() => cc[0])           // MIDI CC 0 rotates the final modulation
  )
  .out(o0)
```
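In this snippet, `cc` holds the MIDI controller values. The values change live because arguments such as `cc[3]*100` are wrapped in arrow functions: Hydra calls a function argument again on every frame instead of reading a number once. The sketch below imitates that idea in plain JavaScript, outside Hydra. Several details are assumptions for illustration and not necessarily what our MIDI setup used: the handler name `onMidiMessage`, the 0.5 starting value, and normalising values to the 0 to 1 range by dividing by 127.

```javascript
// Hypothetical stand-in for the MIDI setup that fills `cc`:
// 128 controller slots, each starting at an assumed default of 0.5.
const cc = new Array(128).fill(0.5);

// Assumed handler: a Control Change message is [status, controller, value],
// where the high nibble of status is 0xB0 and value ranges from 0 to 127.
function onMidiMessage(data) {
  const [status, controller, value] = data;
  if ((status & 0xf0) === 0xb0) {
    cc[controller] = value / 127; // normalise to 0..1
  }
}

// Same shape as the Hydra arguments: functions, not numbers,
// so every call reads the latest controller value.
const repeats = () => cc[3] * 100;
const shapeRadius = () => cc[1] / 4;

// Simulate two "frames" with a knob turn in between.
const frame1 = repeats();
onMidiMessage([0xb0, 3, 127]); // controller 3 turned all the way up
const frame2 = repeats();
console.log(frame1, frame2); // 50 100
```

If `repeatX` were given `cc[3]*100` without the arrow function, the value would be read once when the line is evaluated, and turning the knob afterwards would have no effect.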

Louis’ part:

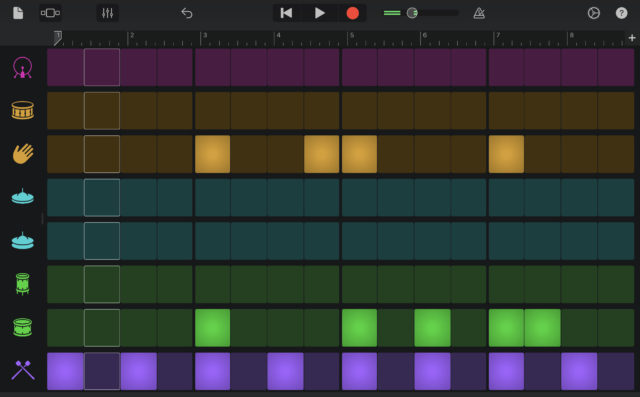

As with our Drum Circle project, I mainly took charge of the music for our final piece. Personally, I am more enthusiastic about making music, and I have accumulated some experience creating music with Tidal. While making this piece, I was inspired by other works, including some pieces made in GarageBand (brilliant works, but quite difficult to realize in Tidal), the beatbox videos Aaron recommended we watch, and of course our own work (namely the Drum Circle). For me, the most critical parts of this piece are the two drops. We spent a lot of time making sure both drops link what comes before them to what follows. Take the second drop as an example: we interweave the two main melodies (the main motif of the piece and the melody played at the end). By doing this, I believe we managed to tie the earlier material together and make the rhythm of the drop section much stronger (judging by the audience's reaction during the live performance, this section was quite successful).

I want to thank my two lovely teammates, Shengyang and Debbie, who gave me a lot of useful advice on shaping the melody and on making the overall structure of the music make sense. I also want to thank Aaron for his great suggestions, especially on improving the first drop (at first, we had a 16-shot 808 that made that section sound more like a drop than the real drop).

Without their help, I would not have been able to realize this work.

Shengyang’s part:

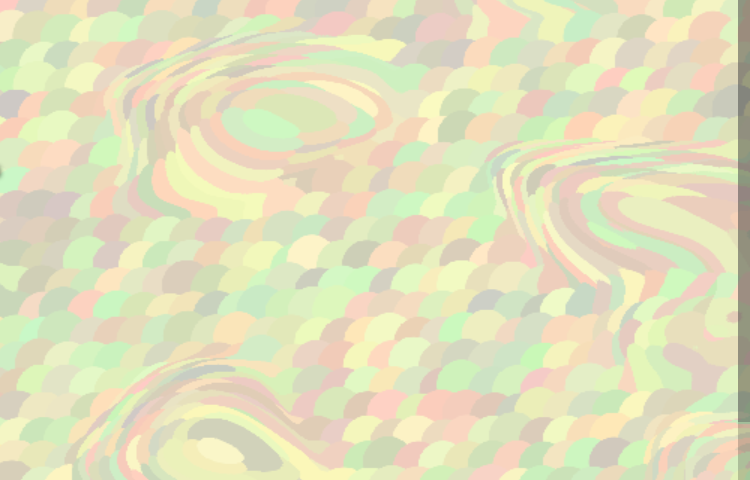

In the final project, I was mainly responsible for the p5.js part. I was also involved in some of the musical improvements and in a set of visuals we later abandoned. Here I will mainly talk about the p5.js part that made it into the final performance. First, we used laser beams, which are realized in p5.js. This effect is layered on top of the original Hydra section in the second half of the show. This is a screenshot of the laser beams from p5 combined with Hydra.

The laser beam effect was inspired by the music video for A L I E N S by Coldplay, which Louis showed us. You can find the video here; the laser beams appear at about 2:30. This is the laser beam in the video:

I used a class to store the location and direction of each beam:

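```javascript
// One laser beam. `p2` is the p5 instance and `ccActual` the MIDI array,
// both defined elsewhere in the performance code.
class RayLP {
  constructor(x, y, startAngle) {
    this.originX = x;          // where the beam starts
    this.originY = y;
    this.distance = 0;         // how far it has travelled from the origin
    this.angle = startAngle;   // direction of travel
    this.stillOnScreen = true;
    this.speed = 1;
    this.length = p2.floor(p2.random(8, 17)); // number of segments drawn
    this.color = p2.random(0, 1);             // picks one of two colours in show()
  }

  move() {
    this.distance = this.distance + this.speed;
    // accelerate as the beam travels; MIDI CC 9 adds an extra push
    this.speed = this.speed + this.distance / 350 + ccActual[9];
  }

  check() {
    // instance mode: the canvas width lives on p2
    this.stillOnScreen = (this.distance < p2.width / 2);
  }

  // Off-screen beams are only freed once draw() removes them from its list;
  // `delete(this)` by itself just returns true and releases nothing.
  destroy() {
    this.stillOnScreen = false;
  }

  show() {
    p2.push(); // remember the current fill and stroke
    if (this.color > 0.5) {
      p2.stroke(220, 65, 255, 180 - this.distance); // purple, fading with distance
    } else {
      p2.stroke(139, 0, 50); // dark red
    }
    p2.strokeWeight(9);
    if (this.stillOnScreen) {
      for (let i = 0; i < this.length; i++) {
        const x = this.originX + (this.distance + i * 2) * p2.cos(this.angle);
        const y = this.originY + (this.distance + i * 2) * p2.sin(this.angle);
        const xTo = this.originX + (this.distance + i * 10) * p2.cos(this.angle);
        const yTo = this.originY + (this.distance + i * 10) * p2.sin(this.angle);
        p2.line(x, y, xTo, yTo);
      }
    } else {
      this.destroy();
    }
    p2.pop(); // restore fill and stroke
  }
}
```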
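In `move()`, the speed grows by `this.distance / 350` on every frame, so each beam accelerates the farther it travels, and `ccActual[9]` adds an extra push on top. The headless sketch below reproduces only that arithmetic, without p5, to show how quickly a beam reaches the `check()` threshold of half the canvas width. The function name `framesUntilOffScreen`, the 800-pixel width, and the constant boost of 2 are assumptions for illustration.

```javascript
// Replays the arithmetic of RayLP.move() and RayLP.check() without drawing.
// `boost` plays the role of ccActual[9]; it is held constant here for simplicity.
function framesUntilOffScreen(canvasWidth, boost = 0) {
  let distance = 0;
  let speed = 1;
  let frames = 0;
  // check(): the beam counts as on screen while distance < width / 2
  while (distance < canvasWidth / 2) {
    // move(): same update order as the class
    distance = distance + speed;
    speed = speed + distance / 350 + boost;
    frames++;
  }
  return frames;
}

const calm = framesUntilOffScreen(800, 0);   // knob at zero
const pushed = framesUntilOffScreen(800, 2); // hypothetical constant boost
console.log(calm, pushed);
```

Because the boost is added to the speed on every frame, even a small MIDI value shortens a beam's life noticeably, which is what makes CC 9 feel like an intensity control.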

In the p5 draw function, on every frame the program calls show and check on each beam and pushes new laser beams into a list. We eventually changed the color/stroke to dark purple and dark red, matching the background. After we applied Hydra functions to the laser beam effect, the beams could twist like the background dots, which makes the effect less abrupt.
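Here is a sketch of the per-frame loop described above, written without p5 so it runs on its own. Several parts are assumptions and not necessarily what the performance code did: `Beam` is a trimmed, hypothetical stand-in for `RayLP` that keeps only the fields the loop touches, `drawFrame`, `beamsPerFrame`, and the random angle are illustrative, and the final step that drops beams whose `stillOnScreen` flag is false is one way to keep the list from growing.

```javascript
// Minimal stand-in for a beam: only the fields the frame loop touches.
// (In the real sketch these would be RayLP instances that also draw themselves.)
class Beam {
  constructor(angle) {
    this.angle = angle;
    this.distance = 0;
    this.speed = 1;
    this.stillOnScreen = true;
  }
  move(boost) {
    this.distance += this.speed;
    this.speed += this.distance / 350 + boost;
  }
  check(width) {
    this.stillOnScreen = this.distance < width / 2;
  }
}

// One hypothetical draw() call: spawn new beams, update all, drop finished ones.
function drawFrame(beams, width, boost, beamsPerFrame = 2) {
  for (let i = 0; i < beamsPerFrame; i++) {
    beams.push(new Beam(Math.random() * 2 * Math.PI));
  }
  for (const beam of beams) {
    beam.move(boost);
    beam.check(width);
    // in p5, beam.show() would draw the beam here
  }
  // Keep only beams still on screen, so the list cannot grow forever.
  return beams.filter((beam) => beam.stillOnScreen);
}

let beams = [];
for (let frame = 0; frame < 1000; frame++) {
  beams = drawFrame(beams, 800, 0);
}
console.log(beams.length);
```

Without the `filter` step, the list would hold all 2,000 beams spawned over these 1,000 frames. With it, the list stays at roughly the number of beams spawned during a single beam's lifetime.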

Notably, I made some silly errors in my p5 code, and Omar gave me some very helpful advice. Thanks a lot for that! Also, at the beginning there was no delete(this) part in the class code, which didn't cause any obvious problems in the p5 editor. But once the code was migrated to Hydra, whether in Atom or in Flok, the beams quickly filled up memory, which could make the platform freeze or respond slowly.
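One detail is worth knowing when doing this kind of cleanup in JavaScript. The `delete` operator removes object properties. Applied to `this` inside a method, it simply returns `true`, and the object stays in whatever list holds it. An object is only garbage-collected once nothing references it anymore, which for the beams means removing them from the list that the draw function loops over. The small check below demonstrates this. The `Probe` class and its `offScreen` flag are hypothetical.

```javascript
class Probe {
  constructor(offScreen) {
    this.offScreen = offScreen;
  }
  destroy() {
    // Legal even in strict mode (class bodies are strict), but `this` is a
    // value rather than a property reference, so this only evaluates to true.
    return delete this;
  }
}

let beams = [new Probe(true), new Probe(false), new Probe(true)];

// Calling destroy() on the off-screen beams leaves the list untouched.
const results = beams.filter((b) => b.offScreen).map((b) => b.destroy());
console.log(results, beams.length); // [ true, true ] 3

// Reassigning to a filtered copy drops the last references to the
// off-screen beams, so the garbage collector can reclaim them.
beams = beams.filter((b) => !b.offScreen);
console.log(beams.length); // 1
```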

I was also in charge of the visuals for the surprise Merry Christmas section at the end. They were adapted from the p5.js Examples, which can be found here.

Hydra did not seem to accept the way classes were declared in that example, so I rewrote them in a more common form that Hydra accepts.

I really enjoyed the class; it was very intellectually stimulating. Thank you so much to Professor Aaron, my teammates, and my lovely classmates! I will miss you guys!

You can find videos of our performances here:

Last but not least:

```haskell
once $ s "xmas" # gain 1.2 # room 1.5
```