I found it interesting how the reading shows the “live” component of live coding gradually evolving. I think there are many unexplored avenues in which live coding can intersect with other forms of media, and I’m excited to explore these ideas throughout the rest of the semester as part of the final project. Through this form of self-expression, I think we can also continue to blur the boundary between person and machine, perform as a singular entity, and see how computing can give way to a wider variety of art forms.

Hydra (Nicholas):

We decided to give our drum circle project a floaty underwater-esque energy. When messing around with video inputs, I found a GIF of jellyfish swimming in the Hydra docs as a starting point.

One of the main things I hated about this GIF was the fact that it didn’t create a perfect loop. Having it as the focal point of the visual part of the performance didn’t look good. As a result, I decided to put it in a wave of color derived from an oscillator with many types of modulation applied.

Having the colors come over the screen in a wave allowed for the looping to be more seamless and created the opportunity for us to add something in the background. Buzz Lightyear was the perfect candidate for this because of this perfectly looping, ominous, and a bit funny GIF.

Placing the sad Buzz Lightyear GIF below the flood of colors adds to Buzz’s expression of hopelessness. I found this a bit funny, and by effectively combining 2 different sources to manipulate, I think it logistically also gave more opportunities to mess around with and improvise during the performance.

TidalCycles (Ian, Chenxuan, Bato):

Rather than splitting our roles into strict parts, we decided to freely work on the audio together and see what came out of it. This unrestrained kind of jamming had yielded satisfactory results for our previous meetings, and we thought it would be best to run with what had been effective for us. That’s the spirit of live coding, after all—spontaneous inspiration!

We started by laying out a simple combination of chords alternating between two patterns using the supersaw synth, which sounded quite airy and gave off house music vibes. Ian added a nice panning effect to it, which gave it a sense of dimension and made it sound more dynamic. On top of this, we added a catchy melody that fit with the chords using the “arpy” sample, which was then made glitchy with the jux (striate) function.

We also experimented with a few drum/bass patterns that went along with the melodies. One attempt that went particularly well paired the “drum” samples with a random number generator and a low-pass filter driven by a sine oscillator. This combination allowed for constant variation in both the timbre and the spatial placement of the drums, which kept things adequately erratic and exciting.

We tried to match the atmosphere of the audio with the visuals. This meant that we chose instruments that fit a more floaty-ish vibe—the supersaw synth was a surprisingly decent choice—and gave it more of that underwater feel by adding some reverb (the “room” effect) and audial dimension (the “pan” effect). The drum line with the oscillating lpf values also lent the overall audio a fluid, mesmerizing quality that we felt went along with the aquatic theme.

The reading reminded me of Allan Kaprow’s Happenings because it seems like multimedia artists focus more on the overall experience of an art piece rather than perfecting individual elements. In engaging multiple senses through a variety of artistic mediums, the end result can be more engaging and pull in the person experiencing the piece. I also see this reflected in Live Coding as we use both audio and visuals to create an all-encompassing experience, with the improvisational nature of the Live Coding medium moving our notion of what an art piece is away from a perfected sequence of events to a more holistic experience. I hope to keep making my Live Coding pieces through this high-level view, focusing on the person’s overall experience of the performance.

I found the reading’s discussion on algorithms to be quite interesting, especially when applied to something artistic such as music. My intuition is to associate algorithms with numbers, but through our class, it’s evident that there are multiple ways for us to interact with sound algorithmically. When applying principles of computing to the arts, it becomes clear that there is a strong connection between how composers design repeating patterns and how we interact with TidalCycles. However, we have the added benefit of being able to connect to other digital mediums too. I’m quite interested to see how our Live Coding performances can pull from other digital sources, such as web-based interactions or some creative interpretation of large datasets.

I wanted to blend old and new Japan via some connecting thread through the composition project. I decided to settle on the koto, a Japanese string instrument, for my principal sound. I got a YouTube video and trimmed it to a singular note in Audacity, then imported it into TidalCycles.

When making the melody for the first act, I tried to incorporate a lot of trills and filler notes as is common in many koto songs by breaking up each note into 16th notes and offsetting high and low pitch sounds. For the visuals, I decided to settle on developing a geometric pattern that felt Japanese as the music progressed. I did this by creating thin rectangles, rotating them, and adding a mask using a rectangle that expanded outwards, revealing pieces of the pattern at a time.

shape(2,0.01,0).rotate(Math.PI/2).repeat(VERT_REP).mask(shape(2,()=>show_1,0).scrollY(0.5))

For the transition, I added the typical Japanese train announcement that signified the shifting of time period, but I didn’t get the transition down as smoothly as I hoped. My initial idea was to add train doors closing and for the whole scene to shift, but I couldn’t get the visuals to work as I wanted to. Looking back, I would add the sound of a train moving and the visuals shifting to create the next scene.

For the second scene, I wanted to show the new era of Japan, aka walking around Shibuya woozy and boozy as hell. I got a video of someone walking through Tokyo and slapped on color filters as well as distortion. I tried to add hands to better replicate the first-person perspective, but the static PNG didn’t end up looking very good.

src(s0)

.color(()=>colors[curr_val][0], ()=>colors[curr_val][1], 5)

.scale(1.05)

.modulateRotate(osc(1,0.5,0).kaleid(50).scale(0.5),()=>begin_rotate?0.1:0.05,0)

.modulatePixelate(noise(0.02), ()=>begin_bug?7500:10000000)

.add(

src(s1) // za hando

.rotate(() => (Math.sin(time/2.5)*0.1)%360)

.scale(()=> 1 + 0.05 * (Math.sin(time/4) + 1.5))

)

.modulateScale(osc(4,-0.15,0).kaleid(50),()=>brightness*4) // za warudo

.blend(src(o2).add(src(s3)).modulatePixelate(noise(0.03), 2000), ()=>brightness)

.blend(solid([0,0,0]), ()=>(1-total_brightness))

.out()
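To get a feel for how much the hand layer (`src(s1)`) actually moves, its two time-based functions can be evaluated outside Hydra. This is only a rough sketch: the helper `sampleRange` and the names `handRotation` and `handScale` are made up for illustration, and `time` is passed in as an argument rather than read from Hydra's global.

```javascript
// Time-based functions copied from the hand layer in the Hydra sketch.
// In Hydra, `time` is a global in seconds; here it is an explicit argument.
const handRotation = (time) => (Math.sin(time / 2.5) * 0.1) % 360;
const handScale = (time) => 1 + 0.05 * (Math.sin(time / 4) + 1.5);

// Hypothetical helper: sample a function over a time span and
// record the smallest and largest values it produces.
function sampleRange(fn, seconds = 60, step = 0.01) {
  let min = Infinity;
  let max = -Infinity;
  for (let t = 0; t <= seconds; t += step) {
    const v = fn(t);
    if (v < min) min = v;
    if (v > max) max = v;
  }
  return { min, max };
}

const rotationRange = sampleRange(handRotation);
const scaleRange = sampleRange(handScale);
console.log("rotation:", rotationRange);
console.log("scale:", scaleRange);
```

The rotation sways between roughly -0.1 and 0.1, so the `% 360` never changes the result, and the scale breathes between about 1.025 and 1.125. Widening either range would be one way to make the hands feel less static.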

I controlled the modulation amounts via MIDI and added the JoJo time stop sound effect to alleviate and reintroduce tension. I also made the sound slow down and reverse when stopping the music.
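For the MIDI part, here is a minimal sketch of how controller knobs could feed values like `brightness` and `total_brightness`. It is not the exact setup from the performance: the controller numbers, the `params` object, and the helpers `ccToRange` and `handleMidi` are all assumptions for illustration, and it relies on the browser's Web MIDI API being available.

```javascript
// Hypothetical controller numbers; the real ones depend on the MIDI device.
const CC_BRIGHTNESS = 1;
const CC_TOTAL_BRIGHTNESS = 2;

// Values that Hydra arrow functions could read, e.g. ()=>params.brightness.
const params = { brightness: 0, total_brightness: 1 };

// Scale a 7-bit MIDI value (0..127) into the range [lo, hi].
function ccToRange(value, lo = 0, hi = 1) {
  return lo + (value / 127) * (hi - lo);
}

// Handle one raw MIDI message of the form [status, controller, value].
function handleMidi([status, controller, value]) {
  // 0xB0..0xBF are control change messages on channels 1 to 16.
  const isControlChange = (status & 0xf0) === 0xb0;
  if (!isControlChange) return;
  if (controller === CC_BRIGHTNESS) params.brightness = ccToRange(value);
  if (controller === CC_TOTAL_BRIGHTNESS) params.total_brightness = ccToRange(value);
}

// In a browser with Web MIDI support, forward every input to the handler.
if (typeof navigator !== "undefined" && navigator.requestMIDIAccess) {
  navigator
    .requestMIDIAccess()
    .then((access) => {
      for (const input of access.inputs.values()) {
        input.onmidimessage = (msg) => handleMidi(msg.data);
      }
    })
    .catch((err) => console.warn("MIDI access failed:", err));
}
```

Keeping the scaling in one small function makes it easy to give each knob its own range, so a single knob can sweep a parameter gently or push it to an extreme.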

d2 $ qtrigger $ seqP [

(15, 16, secondMelody 1 0 1),

(15, 16, secondMelody 2 0 1),

(15, 16, bass 0 0.5),

(15, 16, alarm 0),

(15, 16, announcement 0)

] # speed (smooth "1 -1");

d3 $ qtrigger $ seqP [

(15, 16, s "custom:8" # gain 1.75 # cut 1), -- stop time

(18, 19, s "custom:9" # gain 0.75 # lpf 5000 # gain 2 # room 0.1 # cut 1 # speed 0.75) -- start time

];

d4 $ qtrigger $ seqP [

(18, 23, secondMelody 1 1 1),

(18, 23, secondMelody 4 1 1),

(18, 23, bass 1 1),

(18, 23, alarm 1),

(18, 23, announcement 1)

] # speed (slow 6 $ smooth "-1 1 1 1 1 1");

The reading made me critically consider how complex live coding is, especially in relation to software engineering. Having a SWE background myself, I primarily saw programming as a way to fulfill requirements, and the trial and error involved was always a roadblock to the end goal of creating a program that meets certain specifications. However, working in Hydra and TidalCycles, I found myself agreeing with the idea that the process of trial and error leads to roadblocks but also to innovation. I may have a certain sound I’m trying to create, but in trying to do so, I may create a completely different sound that I enjoy and incorporate into my final piece. The idea of no-how adds to this: with uncertainty about how something might look or sound come new innovations and avenues of artistic expression.

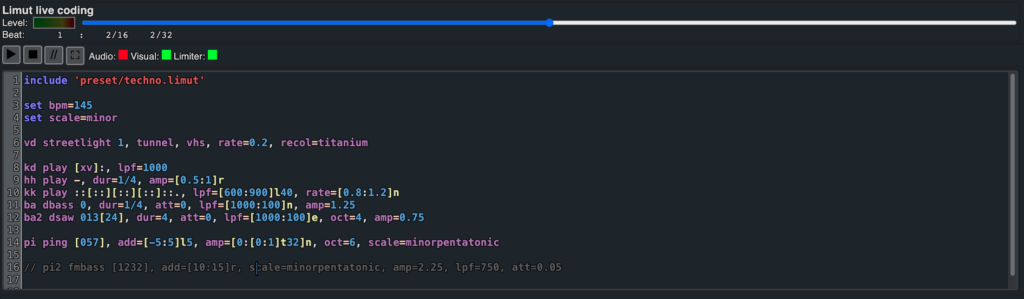

I chose to learn a bit about the LiMuT platform for the research project. It’s a web-based live coding platform built on JavaScript, WebGL, and WebAudio with a syntax similar to TidalCycles. The platform, according to its creator, Stephen Clibbery, was inspired by FoxDot, a Python-based live coding platform. He started it as a personal project in 2019, and since he’s been the sole contributor to the project, the documentation is not as developed as that of other platforms. I reached out to him through LinkedIn to gather more insight into the platform, but he hasn’t responded yet.

The strength of the platform is definitely in its ease of setup, since any modern browser can run the platform at https://sdclibbery.github.io/limut/. The platform supports visuals and audio, but there aren’t many ways to link the two up. Many of the visual tools are preset, and there isn’t a lot of customization that can be done. However, LiMuT is nonetheless a powerful live coding tool that enables the user to perform flexibly. I decided to explore by diving into the documentation and tweaking bits and pieces of the examples, looking into how the pieces interact with one another.