Group Members: Aalya, Alia, Xinyue, Yeji

Inspiration

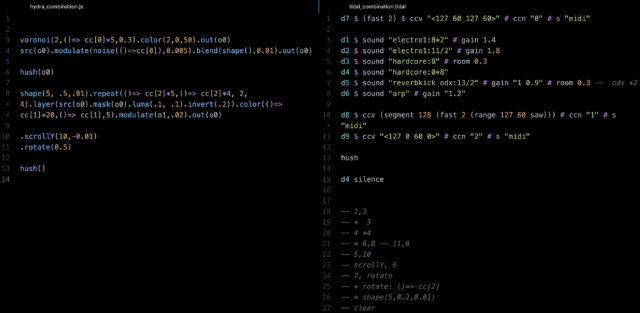

Each of us brought a few visuals and sound samples to the group. We then adjusted the parameters and MIDI values until the visuals and the sounds shared a common theme.

Who did what:

- Tidal: Aalya and Alia

- Hydra: Xinyue and Yeji

Project Progress

Tidal Process

- First, we played around with the audio samples in the “Dirt-Samples” library and tested code from the default samples table in the documentation on the official Tidal website.

- We then mixed and matched several combinations and cut everything that didn’t sound as appealing as we wanted.

- Because Yeji and Xinyue were building the visuals in the same Flok session, we listened to each of our soundtracks with the visuals in mind and narrowed our ideas down to a single theme.

- We had a lot of samples that we thought worked well together, but our track lacked structure, so adding structure was the next step.

- We broke the composition down into four sections: Intro, Phase 1, Phase 2, and Ending.

- After that, it was a matter of layering the audio so that it sounded appealing and transitioned smoothly. For each transition into a new section, we had to decide which sounds to bring in and which parts to silence. The goal was to find a balance and put together tracks that worked well with the visuals and complemented them.

Hydra Process

- We started with a spinning black-and-white image bounded by a circle shape. Our inspiration came from old-fashioned black-and-white movies.

- We modified the small circle by adding .colorama(), which introduces noise.

- .luma() gave the circle a cartoon-like look and reduced the airy outlines.

- The three other outputs used o0 as their source.

- We layered an oscillation on top of the original source and drove it with cc values to create a slowly moving effect that follows the music.

- Then we reduced the scale for a zoom-out effect as the intensity of the music built up.

- For the final output, we intensified the color and scaled down once more before introducing a loophole effect that transitioned back into o0 for the ending scene.
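The steps above could be sketched in Hydra roughly as follows. This is an illustration, not our exact performance code: every numeric value is a guess, the spinning image is approximated with a thresholded oscillator, and the "loophole" is interpreted as a feedback loop. The helpers `readCc` and `zoomFor` are hypothetical names, and `cc` is assumed to be a global array of MIDI controller values already normalized to 0..1.

```javascript
// Hypothetical helper: read controller i from a global `cc` array
// (assumed normalized to 0..1), or use a fallback if it is missing.
function readCc(i, fallback) {
  return typeof cc !== "undefined" && typeof cc[i] === "number" ? cc[i] : fallback;
}

// Hypothetical helper: map an intensity in 0..1 to a scale factor
// that shrinks from 1 down to 0.4, giving a zoom-out as intensity rises.
function zoomFor(intensity) {
  const clamped = Math.min(1, Math.max(0, intensity));
  return 1 - 0.6 * clamped;
}

function patch() {
  // o0: spinning black-and-white pattern masked by a circle,
  // then colorama for noise and luma for harder, cartoon-like edges.
  osc(20, 0.1, 0)
    .thresh(0.5)
    .rotate(() => time * 0.3)
    .mask(shape(64, 0.4, 0.01))
    .colorama(0.05)
    .luma(0.3)
    .out(o0);

  // o1: o0 with a slow oscillation whose strength follows cc[0].
  src(o0)
    .modulate(osc(4, 0.05), () => readCc(0, 0.2) * 0.3)
    .out(o1);

  // o2: o0 scaled down as cc[1] rises (zoom-out).
  src(o0)
    .scale(() => zoomFor(readCc(1, 0)))
    .out(o2);

  // o3: stronger color, smaller scale, blended with its own previous
  // frame to get a feedback loop.
  src(o0)
    .saturate(2)
    .scale(0.8)
    .blend(src(o3).scale(1.02), 0.5)
    .out(o3);

  render(); // show all four outputs in a grid
}

// Only run the patch where Hydra's globals exist (for example, in the Hydra editor).
if (typeof osc === "function") patch();
```

Reading cc through a fallback keeps the visuals moving in a predictable way even when no MIDI data has arrived yet.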

Evaluation and Challenges

- Some of the challenges we encountered during development were finding a time that worked for everyone and communicating our ideas clearly so that everyone was on the same page.

- Another issue was that the cc values were not reflected properly, or not in the same way for everyone, which produced inconsistent visuals that were out of sync with the audio.

- Something that worked very well was the distribution of work. Every part of the project was taken care of so that we had balanced visual and sound elements.
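On the cc issue mentioned above, it can help to see how a cc array is typically filled. The sketch below assumes the values arrive as MIDI control-change messages through the browser's Web MIDI API. `applyMidiMessage` and `ccState` are hypothetical names, and starting every controller at 0.5 is an arbitrary choice. It is not a diagnosis of what went wrong in our setup, only an illustration of the mechanism.

```javascript
// Hypothetical helper: store one MIDI control-change message in a cc array.
// `data` is the 3-byte message [status, controller, value].
function applyMidiMessage(ccArray, data) {
  const [status, controller, value] = data;
  // 0xB0..0xBF are control-change messages on channels 1..16; ignore the rest.
  if ((status & 0xf0) !== 0xb0) return ccArray;
  ccArray[controller] = value / 127; // normalize 0..127 to 0..1
  return ccArray;
}

// Every controller starts at the same known value, so the visuals look
// identical on every machine until the first message arrives.
const ccState = new Array(128).fill(0.5);

// Browser-only hookup through the Web MIDI API; skipped where it is unavailable.
if (typeof navigator !== "undefined" && typeof navigator.requestMIDIAccess === "function") {
  navigator
    .requestMIDIAccess()
    .then((access) => {
      for (const input of access.inputs.values()) {
        input.onmidimessage = (msg) => applyMidiMessage(ccState, msg.data);
      }
    })
    .catch((err) => console.warn("MIDI access was not granted:", err));
}
```

Because the array is only updated in a browser that actually receives the MIDI messages, a shared starting value gives each collaborator the same picture when their MIDI routing differs.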